- n.

- calculus (a stone in the body), stone; dental calculus (tartar)

WordNet

- a hard lump produced by the concretion of mineral salts; found in hollow organs or ducts of the body; "renal calculi can be very painful" (syn.) concretion

- the branch of mathematics that is concerned with limits and with the differentiation and integration of functions (syn.) infinitesimal_calculus

- of any of various dull tannish or grey colors

- the hard inner (usually woody) layer of the pericarp of some fruits (as peaches or plums or cherries or olives) that contains the seed; "you should remove the stones from prunes before cooking" (syn.) pit, endocarp

- an avoirdupois unit used to measure the weight of a human body; equal to 14 pounds; "a heavy chap who must have weighed more than twenty stone"

- a lack of feeling or expression or movement; "he must have a heart of stone"; "her face was as hard as stone"

- building material consisting of a piece of rock hewn in a definite shape for a special purpose; "he wanted a special stone to mark the site"

- kill by throwing stones at; "People wanted to stone the woman who had a child out of wedlock" (syn.) lapidate

- an incrustation that forms on the teeth and gums (syn.) calculus, tophus

- the part of calculus that deals with integration and its application in the solution of differential equations and in determining areas or volumes etc.

PrepTutorEJDIC

- 〈C〉 a calculus, stone (such as forms in the kidney) / 〈U〉 calculus (differential and integral)

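- 〈U〉 stone (as a material), building stone / 〈C〉 a stone, pebble; a small piece of rock / 〈C〉 (in compounds) stone used for a particular purpose / =precious stone / 〈C〉 something resembling a stone in shape or hardness (e.g. a hailstone) / 〈C〉 a calculus (in the kidney, bladder, etc.) / 〈C〉 the stone (pit) of a fruit / 〈C〉 (Brit.) stone, a unit of body weight equal to 14 pounds (about 6.35 kilograms) / made of stone; of stone or stoneware / to throw stones at …; to stone … to death / to fit … with stone (face, pave); to line … with stones / to remove the stones from (fruit)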

- tartar (deposited on the inside of casks as wine ferments) / tartar on the teeth (dental calculus)

Wikipedia preview

Source (authority): Wikipedia, the free encyclopedia, 2014/07/31 11:58:29 (JST)

wiki ja

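| This article cites no verifiable references or sources, or its citations are insufficient. Please help improve the article's reliability by adding sources. (September 2012) |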

| This article may contain original research. Please help improve it by verifying the problematic passages and adding sources. See the talk page for discussion. (September 2012) |

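Differential and integral calculus (微分積分学, bibun sekibungaku; calculus) is a branch of mathematics that forms the basic part of analysis. It rests on two pillars: differentiation, which captures local change, and integration, which handles the global accumulation of local quantities. The boundaries of the field are hard to fix, but it roughly covers matters related to the differentiation and integration of real-valued functions of several variables (including the inverse function theorem and vector analysis).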

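Differentiation is the operation of finding the tangent line, or tangent plane, of a function at a point. Put mathematically, it is at heart the idea of understanding a complicated function through a linear approximation; accordingly, the derivative is a linear map. (The view of the derivative of a multivariable function as a linear map, however, dates only from the 20th century.) Differential equations are a natural extension of this idea.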
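The idea of the derivative as a linear approximation can be checked numerically. The sketch below is illustrative only: the function f(x) = √x, the point a = 4, and the helper name `linear_approx` are our own choices, not taken from the article. The tangent-line value f(a) + f′(a)h approximates f(a + h), and the ratio error/h tends to 0 as h shrinks.

```python
import math

def linear_approx(f, df, a, h):
    """Tangent-line (linear) approximation f(a) + f'(a) * h of f(a + h)."""
    return f(a) + df(a) * h

# Illustrative choice: f(x) = sqrt(x), whose derivative is 1 / (2 * sqrt(x)).
f = math.sqrt
df = lambda x: 1.0 / (2.0 * math.sqrt(x))

a = 4.0
for h in (0.1, 0.01, 0.001):
    approx = linear_approx(f, df, a, h)
    error = abs(f(a + h) - approx)
    # error / h shrinks toward 0: the linear map h -> f'(a) * h captures
    # f near a up to a term that is small compared with h.
    print(f"h={h}: approx={approx:.8f}, exact={f(a + h):.8f}, error/h={error / h:.2e}")
```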

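Integration, by contrast, corresponds geometrically to finding the area (or volume) of the region between a curve (or surface) and the coordinate axes. Bernhard Riemann defined the value of the (single-variable) definite integral directly as the limit of approximations by rectangles, and proved, among other results, that continuous functions are integrable. Integrals in his sense are called Riemann integrals.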

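Differentiation and integration are entirely different concepts, yet they are closely related: for functions of one variable, each is the inverse operation of the other (the fundamental theorem of calculus).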

Contents

- 1 History

- 1.1 Ancient

- 1.2 Medieval

- 1.3 Modern

- 1.4 Significance

- 2 Notes and references

- 3 See also

History

See also: Mathematical analysis#History

Ancient

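Some ideas of integration existed in antiquity, but there was no effort to develop them in a rigorous or systematic way. The computation of volumes and areas, a basic function of integral calculus, goes back to the Egyptian Moscow Papyrus (c. 1820 BC), which correctly computes the volume of a frustum of a pyramid.[1][2] In Greek mathematics, Eudoxus (c. 408–355 BC) computed areas and volumes with the method of exhaustion, a forerunner of the concept of the limit, and Archimedes (c. 287–212 BC) developed it further into heuristics closely resembling integral calculus.[3] Around the 3rd century AD, Liu Hui in China also used the method of exhaustion to find the area of a circle. In the 5th century, Zu Chongzhi found the volume of a sphere with a method that would later be called Cavalieri's principle.[2]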

Medieval

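Around AD 1000, the Islamic mathematician Ibn al-Haytham (Alhazen) derived a formula for the sum of the fourth powers (biquadrates) of an arithmetic progression, generalized it to sums of arbitrary integer powers, and thereby laid a foundation for integration.[4] The 11th-century Chinese polymath Shen Kuo devised packing formulas that can be used in integration. The 12th-century Indian mathematician Bhāskara II devised techniques for infinitesimal change that anticipated differential calculus, and also described an early form of Rolle's theorem.[5] Also in the 12th century, the Persian mathematician Sharaf al-Dīn al-Tūsī discovered the derivative of cubic polynomials, an important contribution to differential calculus.[6] In 14th-century India, Madhava of Sangamagrama, together with the students of the school of mathematics and astronomy he founded (the Kerala school), worked out special cases of the Taylor series,[7] which were set down in a textbook called Yuktibhasa.[8][9][10]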

Modern

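In the modern era, independent discoveries related to calculus appear in 17th-century Japan. These belong to the wasan (traditional Japanese mathematics) of Seki Takakazu and others, which likewise developed from the method of exhaustion.[citation needed]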

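In Europe, Bonaventura Cavalieri laid the foundations of calculus in a treatise that computed areas and volumes as sums of the areas and volumes of infinitesimally small regions. His approach closely resembles Archimedes' method in The Method, a treatise of Archimedes that was not rediscovered until the early 20th century.[citation needed] Cavalieri's method misconceived the nature of infinitesimals and could lead to incorrect results, so it was not highly regarded.[citation needed]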

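Work on formalizing calculus brought together Cavalieri's infinitesimals and the calculus of finite differences that arose in Europe around the same time. This synthesis was carried out by John Wallis, Isaac Barrow, and James Gregory; Barrow and Gregory proved the second fundamental theorem of calculus around 1670.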

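Isaac Newton introduced the product rule, the chain rule, notation for higher derivatives, Taylor series, and analytic functions in an idiosyncratic notation, and used them to solve problems in mathematical physics. When publishing, Newton recast his infinitesimal calculations as equivalent geometric arguments, in keeping with the mathematical idiom of the time, so as to avoid criticism. Using the methods of calculus, Newton treated many problems in Philosophiæ Naturalis Principia Mathematica, including the orbits of heavenly bodies, the shape of the surface of a rotating fluid, the oblateness of the Earth, and the motion of a weight sliding on a cycloid. Separately, Newton developed series expansions of functions, and it is clear that he understood the principles of Taylor series. Newton did not publish all of his discoveries, and at the time infinitesimal methods were not yet regarded as respectable mathematics.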

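It was Gottfried Leibniz who systematized these ideas and established calculus as a discipline, although at the time he was accused of plagiarizing Newton. Today he is recognized as an independent inventor of and contributor to calculus. Leibniz clearly stated rules for manipulating infinitesimal quantities, made possible the computation of second and higher derivatives, and stated the Leibniz (product) rule and the chain rule. Unlike Newton, Leibniz paid great attention to formalism and reportedly spent days deciding which symbols should represent each concept.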

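Leibniz and Newton are generally both credited with establishing calculus. Newton was the first to apply calculus to physics in general, and Leibniz developed the notation for calculus that is still used today. The basic insights common to both are the laws of differentiation and integration, second and higher derivatives, and the notion of an approximating polynomial series. By Newton's time, the fundamental theorem of calculus was already known.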

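When Newton and Leibniz published their results, a great controversy arose over which of them (and hence which country) deserved the credit. Newton obtained his results first, but Leibniz published first.[11] Because Newton had given his unpublished papers to a few members of the Royal Society, he claimed that Leibniz had stolen ideas from them.[citation needed] The dispute soured relations between British and continental European mathematicians for many years.[12] A careful examination of the papers of Leibniz and Newton showed that they reached their conclusions independently, since Leibniz built his theory starting from integration and Newton starting from differentiation.[citation needed] Today, Newton and Leibniz are each credited with establishing calculus independently. It was Leibniz, however, who gave the new discipline the name "calculus"; Newton called it "the science of fluxions".[citation needed]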

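Many other mathematicians of the period also contributed to the development of calculus. In the 19th century calculus was given a more rigorous mathematical foundation, through the contributions of Cauchy, Riemann, and Weierstrass (the ε-δ definition), among others. In the same period, the ideas of calculus were extended to Euclidean space and the complex plane. Lebesgue extended the notion of the integral so that all but the most pathological functions have integrals, and Laurent Schwartz extended differentiation in a similar way.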

Today, calculus is taught in high schools and universities around the world.[13]

Significance

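Some ideas of calculus developed early in Greece, China, India, Iraq, Persia, and Japan, but calculus as we know it today was established in 17th-century Europe by Isaac Newton and Gottfried Leibniz, building on the work of their predecessors.[citation needed] Calculus rests on earlier work on two problems: finding the area under a curve, and describing motion at an instant.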

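In the modern era, calculus also developed partly alongside ballistics, where it was used to calculate the velocities and trajectories of shells. Many mechanical calculators for differential computations were historically built for ballistic calculations, and one of the first electronic computers, ENIAC, was built to solve the differential equations of ballistics. Calculus is also essential for calculating the strength of gun barrels and the explosion and behavior of gunpowder, so it is closely tied to the history of artillery.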

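Applications of differential calculus include calculations involving velocity and acceleration, the slope of the tangent to a curve, and optimization problems. Applications of integral calculus include calculations of area, volume, arc length, center of mass, work, and pressure. More advanced applications include power series and Fourier series. Calculus can also be used to compute the trajectory of a shuttle docking with a space station, or the amount of snow on a road.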

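Calculus is also used to understand the nature of space, time, and motion more precisely. For centuries, mathematicians and philosophers debated paradoxes involving division by zero and sums of infinitely many numbers. These problems arose in the study of motion and area. The ancient Greek philosopher Zeno gave famous examples, known as Zeno's paradoxes.[citation needed] Calculus, in particular limits and infinite series, can resolve these paradoxes.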

Notes and references

- ^ How the correct answer was obtained is not clear. Morris Kline (Mathematical Thought from Ancient to Modern Times, Vol. I) suggests it may have been found by trial and error.

- ^ a b Helmer Aslaksen. Why Calculus? National University of Singapore.

- ^ Archimedes, Method, in The Works of Archimedes ISBN 978-0-521-66160-7

- ^ Victor J. Katz (1995). "Ideas of Calculus in Islam and India", Mathematics Magazine 68 (3), pp. 163-174.

- ^ Ian G. Pearce. Bhaskaracharya II.

- ^ J. L. Berggren (1990). "Innovation and Tradition in Sharaf al-Din al-Tusi's Muadalat", Journal of the American Oriental Society 110 (2), pp. 304-309.

- ^ “Madhava”. Biography of Madhava. School of Mathematics and Statistics, University of St Andrews, Scotland. Retrieved 13 September 2006.

- ^ “An overview of Indian mathematics”. Indian Maths. School of Mathematics and Statistics, University of St Andrews, Scotland. Retrieved 7 July 2006.

- ^ “Science and technology in free India (PDF)”. Government of Kerala, Kerala Call, September 2004. Prof. C. G. Ramachandran Nair. Retrieved 9 July 2006.

- ^ Charles Whish (1835). Transactions of the Royal Asiatic Society of Great Britain and Ireland.

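- ^ 矢沢サイエンスオフィス (Yazawa Science Office). 『大科学論争』 (Great Scientific Controversies). 学習研究社 (Gakken), 最新科学論シリーズ series, 1998, p. 119. ISBN 4-05-601993-2.

- ^ 矢沢サイエンスオフィス (Yazawa Science Office). 『大科学論争』 (Great Scientific Controversies). 学習研究社 (Gakken), 最新科学論シリーズ series, 1998, pp. 123–125. ISBN 4-05-601993-2.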

- ^ UNESCO - World Data on Education.

See also

- Isaac Newton

- Gottfried Leibniz

- Seki Takakazu

- Fractional calculus


wiki en

| This article includes a list of references, but its sources remain unclear because it has insufficient inline citations. Please help to improve this article by introducing more precise citations. (December 2013) |


Calculus is the mathematical study of change,[1] in the same way that geometry is the study of shape and algebra is the study of operations and their application to solving equations. It has two major branches: differential calculus (concerning rates of change and slopes of curves) and integral calculus (concerning accumulation of quantities and the areas under and between curves); these two branches are related to each other by the fundamental theorem of calculus. Both branches make use of the fundamental notions of convergence of infinite sequences and infinite series to a well-defined limit. Generally considered to have been founded in the 17th century by Isaac Newton and Gottfried Leibniz, calculus today has widespread uses in science, engineering and economics and can solve many problems that algebra alone cannot.

Calculus is a part of modern mathematics education. A course in calculus is a gateway to other, more advanced courses in mathematics devoted to the study of functions and limits, broadly called mathematical analysis. Calculus has historically been called "the calculus of infinitesimals", or "infinitesimal calculus". The word "calculus" comes from Latin (calculus) and refers to a small stone used for counting. More generally, calculus (plural calculi) refers to any method or system of calculation guided by the symbolic manipulation of expressions. Some examples of other well-known calculi are propositional calculus, calculus of variations, lambda calculus, and process calculus.

Contents

- 1 History

- 1.1 Ancient

- 1.2 Medieval

- 1.3 Modern

- 1.4 Foundations

- 1.5 Significance

- 2 Principles

- 2.1 Limits and infinitesimals

- 2.2 Differential calculus

- 2.3 Leibniz notation

- 2.4 Integral calculus

- 2.5 Fundamental theorem

- 3 Applications

- 4 Varieties

- 4.1 Non-standard calculus

- 4.2 Smooth infinitesimal analysis

- 4.3 Constructive analysis

- 5 See also

- 5.1 Lists

- 5.2 Other related topics

- 6 References

- 6.1 Notes

- 6.2 Books

- 7 Other resources

- 7.1 Further reading

- 7.2 Online books

- 8 External links

History

Modern calculus was developed in 17th century Europe by Isaac Newton and Gottfried Wilhelm Leibniz, but elements of it have appeared in ancient Greece, China, medieval Europe, India, and the Middle East.

Ancient

The ancient period introduced some of the ideas that led to integral calculus, but does not seem to have developed these ideas in a rigorous and systematic way. Calculations of volume and area, one goal of integral calculus, can be found in the Egyptian Moscow papyrus (c. 1820 BC), but the formulas are simple instructions, with no indication as to method, and some of them lack major components.[2] From the age of Greek mathematics, Eudoxus (c. 408−355 BC) used the method of exhaustion, which foreshadows the concept of the limit, to calculate areas and volumes, while Archimedes (c. 287−212 BC) developed this idea further, inventing heuristics which resemble the methods of integral calculus.[3] The method of exhaustion was later reinvented in China by Liu Hui in the 3rd century AD in order to find the area of a circle.[4] In the 5th century AD, Zu Chongzhi established a method that would later be called Cavalieri's principle to find the volume of a sphere.[5]
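The method of exhaustion can be illustrated with inscribed polygons. The following is a modern sketch in the spirit of Liu Hui's side-doubling approach, not a reconstruction of his actual computation; the helper name `inscribed_polygon_areas` and the starting hexagon are assumptions for illustration. Each doubling of the number of sides brings the inscribed area closer to the area of the circle (π for radius 1), always from below.

```python
import math

def inscribed_polygon_areas(doublings, r=1.0):
    """Areas of regular polygons inscribed in a circle of radius r,
    starting from a hexagon and doubling the number of sides each step."""
    n, s = 6, r  # a regular hexagon inscribed in a circle has side length r
    areas = []
    for _ in range(doublings):
        # The 2n-gon splits into n kites with perpendicular diagonals s and r,
        # so its area is n * s * r / 2.
        areas.append((2 * n, n * s * r / 2))
        # Side of the 2n-gon from the side of the n-gon (Pythagoras twice).
        s = math.sqrt(2 * r * r - r * math.sqrt(4 * r * r - s * s))
        n *= 2
    return areas

for sides, area in inscribed_polygon_areas(6):
    print(sides, area, math.pi - area)
```

The last column is the remaining gap to π, which shrinks by roughly a factor of four per doubling.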

Medieval

Alexander the Great's invasion of northern India brought Greek trigonometry, using the chord, to India where the sine, cosine, and tangent were conceived. Indian mathematicians gave a semi-rigorous method of differentiation of some trigonometric functions. In the Middle East, Alhazen derived a formula for the sum of fourth powers. He used the results to carry out what would now be called an integration, where the formulas for the sums of integral squares and fourth powers allowed him to calculate the volume of a paraboloid.[6] In the 14th century, Indian mathematician Madhava of Sangamagrama and the Kerala school of astronomy and mathematics stated components of calculus such as the Taylor series and infinite series approximations.[7] However, they were not able to "combine many differing ideas under the two unifying themes of the derivative and the integral, show the connection between the two, and turn calculus into the great problem-solving tool we have today".[6]
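The sum of fourth powers mentioned above has a standard closed form, 1⁴ + 2⁴ + ... + n⁴ = n(n + 1)(2n + 1)(3n² + 3n - 1)/30. The sketch below is our illustration, not Alhazen's own derivation: it checks the formula by brute force and shows that the sum divided by n⁵ approaches 1/5, the value of the integral of x⁴ from 0 to 1, which is the kind of limit behind a volume computation like the paraboloid's.

```python
def sum_fourth_powers(n):
    """Closed form for 1**4 + 2**4 + ... + n**4 (exact integer arithmetic)."""
    return n * (n + 1) * (2 * n + 1) * (3 * n * n + 3 * n - 1) // 30

# Compare the closed form with a brute-force sum for small n.
for n in range(1, 50):
    assert sum_fourth_powers(n) == sum(k**4 for k in range(1, n + 1))

# Scaled by n**5, the sum approaches 1/5 = integral of x**4 over [0, 1].
for n in (10, 100, 1000):
    print(n, sum_fourth_powers(n) / n**5)
```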

Modern

| "The calculus was the first achievement of modern mathematics and it is difficult to overestimate its importance. I think it defines more unequivocally than anything else the inception of modern mathematics, and the system of mathematical analysis, which is its logical development, still constitutes the greatest technical advance in exact thinking." —John von Neumann[8] |

In Europe, the foundational work was a treatise by Bonaventura Cavalieri, who argued that volumes and areas should be computed as the sums of the volumes and areas of infinitesimally thin cross-sections. The ideas were similar to those of Archimedes in The Method, but Archimedes' treatise was lost until the early part of the twentieth century. Cavalieri's work was not well respected since his methods could lead to erroneous results, and the infinitesimal quantities he introduced were disreputable at first.

The formal study of calculus brought together Cavalieri's infinitesimals with the calculus of finite differences developed in Europe at around the same time. Pierre de Fermat, claiming that he borrowed from Diophantus, introduced the concept of adequality, which represented equality up to an infinitesimal error term.[9] The combination was achieved by John Wallis, Isaac Barrow, and James Gregory, the latter two proving the second fundamental theorem of calculus around 1670.

The product rule and chain rule, the notion of higher derivatives, Taylor series, and analytical functions were introduced by Isaac Newton in an idiosyncratic notation which he used to solve problems of mathematical physics.[10] In his works, Newton rephrased his ideas to suit the mathematical idiom of the time, replacing calculations with infinitesimals by equivalent geometrical arguments which were considered beyond reproach. He used the methods of calculus to solve the problem of planetary motion, the shape of the surface of a rotating fluid, the oblateness of the earth, the motion of a weight sliding on a cycloid, and many other problems discussed in his Principia Mathematica (1687). In other work, he developed series expansions for functions, including fractional and irrational powers, and it was clear that he understood the principles of the Taylor series. He did not publish all these discoveries, and at this time infinitesimal methods were still considered disreputable.

These ideas were arranged into a true calculus of infinitesimals by Gottfried Wilhelm Leibniz, who was originally accused of plagiarism by Newton.[11] He is now regarded as an independent inventor of and contributor to calculus. His contribution was to provide a clear set of rules for working with infinitesimal quantities, allowing the computation of second and higher derivatives, and providing the product rule and chain rule, in their differential and integral forms. Unlike Newton, Leibniz paid a lot of attention to the formalism, often spending days determining appropriate symbols for concepts.

Leibniz and Newton are usually both credited with the invention of calculus. Newton was the first to apply calculus to general physics and Leibniz developed much of the notation used in calculus today. The basic insights that both Newton and Leibniz provided were the laws of differentiation and integration, second and higher derivatives, and the notion of an approximating polynomial series. By Newton's time, the fundamental theorem of calculus was known.

When Newton and Leibniz first published their results, there was great controversy over which mathematician (and therefore which country) deserved credit. Newton derived his results first (later to be published in his Method of Fluxions), but Leibniz published his Nova Methodus pro Maximis et Minimis first. Newton claimed Leibniz stole ideas from his unpublished notes, which Newton had shared with a few members of the Royal Society. This controversy divided English-speaking mathematicians from continental mathematicians for many years, to the detriment of English mathematics. A careful examination of the papers of Leibniz and Newton shows that they arrived at their results independently, with Leibniz starting first with integration and Newton with differentiation. Today, both Newton and Leibniz are given credit for developing calculus independently. It is Leibniz, however, who gave the new discipline its name. Newton called his calculus "the science of fluxions".

Since the time of Leibniz and Newton, many mathematicians have contributed to the continuing development of calculus. One of the first and most complete works on finite and infinitesimal analysis was written in 1748 by Maria Gaetana Agnesi.[12]

Foundations

In calculus, foundations refers to the rigorous development of a subject from precise axioms and definitions. In early calculus the use of infinitesimal quantities was thought unrigorous, and was fiercely criticized by a number of authors, most notably Michel Rolle and Bishop Berkeley. Berkeley famously described infinitesimals as the ghosts of departed quantities in his book The Analyst in 1734. Working out a rigorous foundation for calculus occupied mathematicians for much of the century following Newton and Leibniz, and is still to some extent an active area of research today.

Several mathematicians, including Maclaurin, tried to prove the soundness of using infinitesimals, but it was not until 150 years later, through the work of Cauchy and Weierstrass, that a way was finally found to avoid mere "notions" of infinitely small quantities.[13] The foundations of differential and integral calculus had been laid. In Cauchy's writing (see Cours d'Analyse), we find a broad range of foundational approaches, including a definition of continuity in terms of infinitesimals, and a (somewhat imprecise) prototype of an (ε, δ)-definition of limit in the definition of differentiation. In his work Weierstrass formalized the concept of limit and eliminated infinitesimals. Following the work of Weierstrass, it eventually became common to base calculus on limits instead of infinitesimal quantities, though the subject is still occasionally called "infinitesimal calculus". Bernhard Riemann used these ideas to give a precise definition of the integral. It was also during this period that the ideas of calculus were generalized to Euclidean space and the complex plane.

In modern mathematics, the foundations of calculus are included in the field of real analysis, which contains full definitions and proofs of the theorems of calculus. The reach of calculus has also been greatly extended. Henri Lebesgue invented measure theory and used it to define integrals of all but the most pathological functions. Laurent Schwartz introduced distributions, which can be used to take the derivative of any locally integrable function, in a generalized (distributional) sense.

Limits are not the only rigorous approach to the foundation of calculus. Another way is to use Abraham Robinson's non-standard analysis. Robinson's approach, developed in the 1960s, uses technical machinery from mathematical logic to augment the real number system with infinitesimal and infinite numbers, as in the original Newton-Leibniz conception. The resulting numbers are called hyperreal numbers, and they can be used to give a Leibniz-like development of the usual rules of calculus.

Significance

While many of the ideas of calculus had been developed earlier in Egypt, Greece, China, India, Iraq, Persia, and Japan, the use of calculus began in Europe, during the 17th century, when Isaac Newton and Gottfried Wilhelm Leibniz built on the work of earlier mathematicians to introduce its basic principles. The development of calculus was built on earlier concepts of instantaneous motion and area underneath curves.

Applications of differential calculus include computations involving velocity and acceleration, the slope of a curve, and optimization. Applications of integral calculus include computations involving area, volume, arc length, center of mass, work, and pressure. More advanced applications include power series and Fourier series.
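As a small optimization sketch, take a hypothetical problem: among rectangles of perimeter 20, find the one with the largest area A(x) = x(10 - x). The maximum lies where A′(x) = 10 - 2x is zero. Here that root is found by bisection, a swapped-in numerical method, even though it can be solved by hand, to show the general recipe of solving f′(x) = 0. The helper `bisect_root` is our own name.

```python
def bisect_root(g, lo, hi, tol=1e-12):
    """Find a root of g on [lo, hi], assuming g(lo) and g(hi) differ in sign."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if (g(lo) > 0) == (g(mid) > 0):
            lo = mid  # no sign change on [lo, mid]; the root is in [mid, hi]
        else:
            hi = mid
    return (lo + hi) / 2

# Rectangle with perimeter 20: sides x and 10 - x, area A(x) = x * (10 - x).
area = lambda x: x * (10 - x)
d_area = lambda x: 10 - 2 * x  # A'(x), computed by hand

best = bisect_root(d_area, 0.0, 10.0)
print(best, area(best))  # approximately 5.0 and 25.0: the best rectangle is a square
```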

Calculus is also used to gain a more precise understanding of the nature of space, time, and motion. For centuries, mathematicians and philosophers wrestled with paradoxes involving division by zero or sums of infinitely many numbers. These questions arise in the study of motion and area. The ancient Greek philosopher Zeno of Elea gave several famous examples of such paradoxes. Calculus provides tools, especially the limit and the infinite series, which resolve the paradoxes.
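Zeno's dichotomy paradox (our choice among his paradoxes) asks how a runner can cover a distance by first covering half of it, then half of the rest, and so on. The limit of the partial sums of 1/2 + 1/4 + 1/8 + ... resolves it. The sketch below uses exact fractions to show that the n-th partial sum is 1 - 1/2ⁿ, so the sums approach 1; the helper name `partial_sums` is ours.

```python
from fractions import Fraction

def partial_sums(n_terms):
    """Partial sums of 1/2 + 1/4 + 1/8 + ..., the stages of Zeno's dichotomy."""
    total = Fraction(0)
    sums = []
    for k in range(1, n_terms + 1):
        total += Fraction(1, 2**k)
        sums.append(total)
    return sums

for k, s in enumerate(partial_sums(10), start=1):
    # Each partial sum equals 1 - 1/2**k, so the gap to 1 halves every step.
    print(k, s, 1 - s)
```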

Principles

Limits and infinitesimals

Calculus is usually developed by working with very small quantities. Historically, the first method of doing so was by infinitesimals. These are objects which can be treated like numbers but which are, in some sense, "infinitely small". An infinitesimal number dx could be greater than 0, but less than any number in the sequence 1, 1/2, 1/3, ... and less than any positive real number. Any integer multiple of an infinitesimal is still infinitely small, i.e., infinitesimals do not satisfy the Archimedean property. From this point of view, calculus is a collection of techniques for manipulating infinitesimals. This approach fell out of favor in the 19th century because it was difficult to make the notion of an infinitesimal precise. However, the concept was revived in the 20th century with the introduction of non-standard analysis and smooth infinitesimal analysis, which provided solid foundations for the manipulation of infinitesimals.
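Smooth infinitesimal analysis works with nilsquare infinitesimals, quantities d with d² = 0. Dual numbers a + bε with ε² = 0 mimic that algebra computationally and are the basis of forward-mode automatic differentiation; they are a swapped-in illustration, not the formal theory itself. In the sketch below (the class `Dual` and helper `derivative_at` are hypothetical names), evaluating a polynomial at x + ε leaves f(x) + f′(x)ε, so the ε coefficient is the derivative.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Dual:
    """The number a + b*eps, where eps * eps == 0 (a 'nilsquare' infinitesimal)."""
    a: float
    b: float = 0.0

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.a + other.a, self.b + other.b)

    __radd__ = __add__

    def __sub__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.a - other.a, self.b - other.b)

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # (a + b eps)(c + d eps) = ac + (ad + bc) eps, because eps**2 = 0.
        return Dual(self.a * other.a, self.a * other.b + self.b * other.a)

    __rmul__ = __mul__

def derivative_at(f, x):
    """Evaluate f at x + eps; the eps coefficient is f'(x) for polynomial f."""
    return f(Dual(x, 1.0)).b

square = lambda x: x * x
cubic = lambda x: 3 * x * x * x - 2 * x + 1

print(derivative_at(square, 3.0))  # 6.0
print(derivative_at(cubic, 2.0))   # 34.0, since the derivative 9x**2 - 2 is 34 at x = 2
```

This minimal class supports only addition, subtraction, and multiplication, so it handles polynomials; division or functions like sine would need further rules.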

In the 19th century, infinitesimals were replaced by the epsilon-delta approach to limits. Limits describe the value of a function at a certain input in terms of its values at nearby inputs. They capture small-scale behavior in the context of the real number system. In this treatment, calculus is a collection of techniques for manipulating certain limits. Infinitesimals get replaced by very small numbers, and the infinitely small behavior of the function is found by taking the limiting behavior for smaller and smaller numbers. Limits are the easiest way to provide rigorous foundations for calculus, and for this reason they are the standard approach.
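For a concrete epsilon-delta argument, take the limit of x² as x approaches 3, which is 9 (our example). If |x - 3| < δ ≤ 1, then |x + 3| < 7, so |x² - 9| = |x - 3|·|x + 3| < 7δ; hence δ = min(1, ε/7) works for every ε > 0. The sketch below encodes that choice in a hypothetical helper `delta_for` and spot-checks it at random points. The sampling is a sanity check, not a proof.

```python
import random

def delta_for(eps):
    """A delta that works for lim_{x->3} x**2 = 9.
    If |x - 3| < delta <= 1 then |x + 3| < 7, so
    |x**2 - 9| = |x - 3| * |x + 3| < 7 * delta <= eps."""
    return min(1.0, eps / 7.0)

def spot_check(eps, samples=10_000, seed=0):
    """Sample points with |x - 3| < delta and confirm |x**2 - 9| < eps."""
    rng = random.Random(seed)
    d = delta_for(eps)
    for _ in range(samples):
        x = 3.0 + rng.uniform(-d, d) * 0.999999  # stay strictly inside the interval
        if abs(x * x - 9.0) >= eps:
            return False
    return True

for eps in (1.0, 0.1, 1e-3, 1e-6):
    print(eps, delta_for(eps), spot_check(eps))
```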

Differential calculus

Differential calculus is the study of the definition, properties, and applications of the derivative of a function. The process of finding the derivative is called differentiation. Given a function and a point in the domain, the derivative at that point is a way of encoding the small-scale behavior of the function near that point. By finding the derivative of a function at every point in its domain, it is possible to produce a new function, called the derivative function or just the derivative of the original function. In mathematical jargon, the derivative is a linear operator which inputs a function and outputs a second function. This is more abstract than many of the processes studied in elementary algebra, where functions usually input a number and output another number. For example, if the doubling function is given the input three, then it outputs six, and if the squaring function is given the input three, then it outputs nine. The derivative, however, can take the squaring function as an input. This means that the derivative takes all the information of the squaring function—such as that two is sent to four, three is sent to nine, four is sent to sixteen, and so on—and uses this information to produce another function. (The function it produces turns out to be the doubling function.)
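To make the idea of the derivative as a function-to-function operation concrete, here is a minimal sketch. The helper `derivative` is hypothetical and uses a central difference quotient with a small fixed h, which only approximates the limit in the definition. It takes the squaring function and returns a new function that agrees with the doubling function up to rounding error.

```python
def derivative(f, h=1e-6):
    """Return a new function approximating f' by a central difference quotient."""
    def df(x):
        return (f(x + h) - f(x - h)) / (2 * h)
    return df

square = lambda x: x * x
double = lambda x: 2 * x

d_square = derivative(square)  # a function goes in, a function comes out
for x in (2.0, 3.0, 4.0):
    print(x, d_square(x), double(x))  # the two columns agree up to rounding
```

For x² the central difference is exact in exact arithmetic, ((x + h)² - (x - h)²)/(2h) = 2x, so the only discrepancy is floating-point rounding.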

The most common symbol for a derivative is an apostrophe-like mark called prime. Thus, the derivative of a function f is f′, pronounced "f prime." For instance, if f(x) = x² is the squaring function, then f′(x) = 2x is its derivative, the doubling function.

If the input of the function represents time, then the derivative represents change with respect to time. For example, if f is a function that takes a time as input and gives the position of a ball at that time as output, then the derivative of f is how the position is changing in time, that is, it is the velocity of the ball.

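If a function is linear (that is, if the graph of the function is a straight line), then the function can be written as y = mx + b, where x is the independent variable, y is the dependent variable, b is the y-intercept, and

$$m = \frac{\text{rise}}{\text{run}} = \frac{\text{change in } y}{\text{change in } x} = \frac{\Delta y}{\Delta x}.$$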

This gives an exact value for the slope of a straight line. If the graph of the function is not a straight line, however, then the change in y divided by the change in x varies. Derivatives give an exact meaning to the notion of change in output with respect to change in input. To be concrete, let f be a function, and fix a point a in the domain of f. (a, f(a)) is a point on the graph of the function. If h is a number close to zero, then a + h is a number close to a. Therefore (a + h, f(a + h)) is close to (a, f(a)). The slope between these two points is

$$m = \frac{f(a+h) - f(a)}{(a+h) - a} = \frac{f(a+h) - f(a)}{h}.$$

This expression is called a difference quotient. A line through two points on a curve is called a secant line, so m is the slope of the secant line between (a, f(a)) and (a + h, f(a + h)). The secant line is only an approximation to the behavior of the function at the point a because it does not account for what happens between a and a + h. It is not possible to discover the behavior at a by setting h to zero because this would require dividing by zero, which is undefined. The derivative is defined by taking the limit as h tends to zero, meaning that it considers the behavior of f for all small values of h and extracts a consistent value for the case when h equals zero:

$$f'(a) = \lim_{h \to 0} \frac{f(a+h) - f(a)}{h}.$$

Geometrically, the derivative is the slope of the tangent line to the graph of f at a. The tangent line is a limit of secant lines just as the derivative is a limit of difference quotients. For this reason, the derivative is sometimes called the slope of the function f.

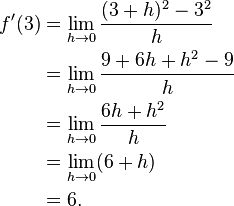

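Here is a particular example, the derivative of the squaring function at the input 3. Let f(x) = x² be the squaring function.

$$\begin{aligned} f'(3) &= \lim_{h \to 0} \frac{(3+h)^2 - 3^2}{h} = \lim_{h \to 0} \frac{9 + 6h + h^2 - 9}{h} \\ &= \lim_{h \to 0} \frac{6h + h^2}{h} = \lim_{h \to 0} (6 + h) = 6. \end{aligned}$$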

The slope of the tangent line to the squaring function at the point (3, 9) is 6, that is to say, it is going up six times as fast as it is going to the right. The limit process just described can be performed for any point in the domain of the squaring function. This defines the derivative function of the squaring function, or just the derivative of the squaring function for short. A similar computation to the one above shows that the derivative of the squaring function is the doubling function.
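The limit in this worked example can be watched numerically. This sketch (the helper name `difference_quotient` is ours) evaluates secant slopes of f(x) = x² at a = 3 for shrinking h. Algebraically each quotient equals 6 + h, so the values approach the tangent slope 6 without ever setting h to zero.

```python
def difference_quotient(f, a, h):
    """Slope of the secant line through (a, f(a)) and (a + h, f(a + h))."""
    return (f(a + h) - f(a)) / h

square = lambda x: x * x

for h in (1.0, 0.1, 0.01, 0.001):
    # For f(x) = x**2 and a = 3 the quotient simplifies to 6 + h.
    print(h, difference_quotient(square, 3.0, h))
```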

Leibniz notation

A common notation, introduced by Leibniz, for the derivative in the example above is

$$y = x^2, \qquad \frac{dy}{dx} = 2x.$$

In an approach based on limits, the symbol dy/dx is to be interpreted not as the quotient of two numbers but as a shorthand for the limit computed above. Leibniz, however, did intend it to represent the quotient of two infinitesimally small numbers, dy being the infinitesimally small change in y caused by an infinitesimally small change dx applied to x. We can also think of d/dx as a differentiation operator, which takes a function as an input and gives another function, the derivative, as the output. For example:

$$\frac{d}{dx}\left(x^2\right) = 2x.$$

In this usage, the dx in the denominator is read as "with respect to x". Even when calculus is developed using limits rather than infinitesimals, it is common to manipulate symbols like dx and dy as if they were real numbers; although it is possible to avoid such manipulations, they are sometimes notationally convenient in expressing operations such as the total derivative.

Integral calculus

Integral calculus is the study of the definitions, properties, and applications of two related concepts, the indefinite integral and the definite integral. The process of finding the value of an integral is called integration. In technical language, integral calculus studies two related linear operators.

The indefinite integral is the antiderivative, the inverse operation to the derivative. F is an indefinite integral of f when f is a derivative of F. (This use of lower- and upper-case letters for a function and its indefinite integral is common in calculus.)

The definite integral inputs a function and outputs a number, which gives the algebraic sum of areas between the graph of the input and the x-axis. The technical definition of the definite integral is the limit of a sum of areas of rectangles, called a Riemann sum.

A motivating example is the distance traveled in a given time.

If the speed is constant, only multiplication is needed, but if the speed changes, a more powerful method of finding the distance is necessary. One such method is to approximate the distance traveled by breaking up the time into many short intervals of time, then multiplying the time elapsed in each interval by one of the speeds in that interval, and then taking the sum (a Riemann sum) of the approximate distance traveled in each interval. The basic idea is that if only a short time elapses, then the speed will stay more or less the same. However, a Riemann sum only gives an approximation of the distance traveled. We must take the limit of all such Riemann sums to find the exact distance traveled.

When velocity is constant, the total distance traveled over the given time interval can be computed by multiplying velocity and time. For example, travelling a steady 50 mph for 3 hours results in a total distance of 150 miles. In the diagram on the left, when constant velocity and time are graphed, these two values form a rectangle with height equal to the velocity and width equal to the time elapsed. Therefore, the product of velocity and time also calculates the rectangular area under the (constant) velocity curve. This connection between the area under a curve and distance traveled can be extended to any irregularly shaped region exhibiting a fluctuating velocity over a given time period. If f(x) in the diagram on the right represents speed as it varies over time, the distance traveled (between the times represented by a and b) is the area of the shaded region s.

To approximate that area, an intuitive method would be to divide up the distance between a and b into a number of equal segments, the length of each segment represented by the symbol Δx. For each small segment, we can choose one value of the function f(x). Call that value h. Then the area of the rectangle with base Δx and height h gives the distance (time Δx multiplied by speed h) traveled in that segment. Associated with each segment is the average value of the function above it, f(x) = h. The sum of all such rectangles gives an approximation of the area between the axis and the curve, which is an approximation of the total distance traveled. A smaller value for Δx will give more rectangles and in most cases a better approximation, but for an exact answer we need to take a limit as Δx approaches zero.
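The rectangle construction above can be written as a short left Riemann sum. In this sketch the speed profile v(t) = 50 + 10t mph over 3 hours is hypothetical, and each rectangle's height is taken at the left end of its segment, which is one of several valid choices. As Δx shrinks, the sums approach the exact distance of 50·3 + 5·3² = 195 miles; with a constant 50 mph the sum reproduces the 150 miles of the rectangle example.

```python
def riemann_sum(f, a, b, n):
    """Left Riemann sum of f on [a, b] with n equal subintervals of width dx."""
    dx = (b - a) / n
    return sum(f(a + i * dx) * dx for i in range(n))

# Hypothetical speed profile in mph: starts at 50 and rises steadily.
speed = lambda t: 50 + 10 * t

for n in (3, 30, 300, 3000):
    print(n, riemann_sum(speed, 0.0, 3.0, n))  # approaches 195 from below

print(riemann_sum(lambda t: 50.0, 0.0, 3.0, 10))  # constant speed: 150 miles
```

Because this speed is increasing, left endpoints underestimate each segment, here by a total of 45/n miles.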

The symbol of integration is ∫, an elongated S (the S stands for "sum"). The definite integral is written as:

$$\int_a^b f(x)\,dx$$

and is read "the integral from a to b of f-of-x with respect to x." The Leibniz notation dx is intended to suggest dividing the area under the curve into an infinite number of rectangles, so that their width Δx becomes the infinitesimally small dx. In a formulation of the calculus based on limits, the notation

$$\int_a^b \cdots \, dx$$

is to be understood as an operator that takes a function as an input and gives a number, the area, as an output. The terminating differential, dx, is not a number, and is not being multiplied by f(x), although, serving as a reminder of the Δx limit definition, it can be treated as such in symbolic manipulations of the integral. Formally, the differential indicates the variable over which the function is integrated and serves as a closing bracket for the integration operator.

The indefinite integral, or antiderivative, is written:

$$\int f(x)\,dx.$$

Functions differing by only a constant have the same derivative, and it can be shown that the antiderivative of a given function is actually a family of functions differing only by a constant. Since the derivative of the function y = x² + C, where C is any constant, is y′ = 2x, the antiderivative of the latter is given by:

$$\int 2x\,dx = x^2 + C.$$

The unspecified constant C present in the indefinite integral or antiderivative is known as the constant of integration.

Fundamental theorem

The fundamental theorem of calculus states that differentiation and integration are inverse operations. More precisely, it relates the values of antiderivatives to definite integrals. Because it is usually easier to compute an antiderivative than to apply the definition of a definite integral, the fundamental theorem of calculus provides a practical way of computing definite integrals. It can also be interpreted as a precise statement of the fact that differentiation is the inverse of integration.

The fundamental theorem of calculus states: If a function f is continuous on the interval [a, b] and if F is a function whose derivative is f on the interval (a, b), then

$$\int_a^b f(x)\,dx = F(b) - F(a).$$

Furthermore, for every x in the interval (a, b),

$$\frac{d}{dx}\int_a^x f(t)\,dt = f(x).$$

This realization, made by both Newton and Leibniz, who based their results on earlier work by Isaac Barrow, was key to the massive proliferation of analytic results after their work became known. The fundamental theorem provides an algebraic method of computing many definite integrals—without performing limit processes—by finding formulas for antiderivatives. It is also a prototype solution of a differential equation. Differential equations relate an unknown function to its derivatives, and are ubiquitous in the sciences.
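
Both statements of the theorem can be checked numerically. The sketch below uses a hypothetical example: f(x) = 3x² with antiderivative F(x) = x³ on [1, 2]. It compares a midpoint Riemann sum, standing in for the limit definition, with F(b) − F(a) = 7. It then estimates the derivative of the accumulation function A(x) = ∫₁ˣ f(t) dt with a central difference and checks that it matches f(x).

```python
def midpoint_integral(f, a, b, n=10_000):
    """Approximate the definite integral of f on [a, b] with a midpoint Riemann sum."""
    dx = (b - a) / n
    return sum(f(a + (i + 0.5) * dx) for i in range(n)) * dx


def f(x):
    return 3 * x ** 2   # hypothetical integrand


def F(x):
    return x ** 3       # an antiderivative of f, because d/dx x**3 = 3 x**2


a, b = 1.0, 2.0
by_limit = midpoint_integral(f, a, b)   # numerical stand-in for the limit definition
by_theorem = F(b) - F(a)                # fundamental theorem: 8 - 1 = 7


def accumulated(x):
    """A(x) = integral of f from a to x, computed numerically."""
    return midpoint_integral(f, a, x)


x, h = 1.5, 1e-4
slope = (accumulated(x + h) - accumulated(x - h)) / (2 * h)  # estimate of A'(x)
print(by_limit, by_theorem)
print(slope, f(x))   # second part of the theorem: A'(x) should match f(x)
```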

Applications

Calculus is used in every branch of the physical sciences, actuarial science, computer science, statistics, engineering, economics, business, medicine, demography, and in other fields wherever a problem can be mathematically modeled and an optimal solution is desired. It allows one to go from (non-constant) rates of change to the total change or vice versa, and many times in studying a problem we know one and are trying to find the other.

Physics makes particular use of calculus; all concepts in classical mechanics and electromagnetism are related through calculus. The mass of an object of known density, the moment of inertia of objects, and the total energy of an object within a conservative field can all be found by the use of calculus. An example of the use of calculus in mechanics is Newton's second law of motion. As historically stated, it expressly uses the term "rate of change", which refers to the derivative: The rate of change of momentum of a body is equal to the resultant force acting on the body and is in the same direction. Commonly expressed today as Force = Mass × acceleration, it involves differential calculus because acceleration is the time derivative of velocity, or the second time derivative of position along the trajectory. Starting from knowing how an object is accelerating, we use calculus to derive its path.
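
Going from acceleration back to position means integrating twice. As a rough sketch, with a hypothetical constant acceleration, initial speed, and step count, the code below accumulates velocity and then position over many small time steps using the semi-implicit Euler scheme. It compares the result with the closed form x(t) = x₀ + v₀t + ½at² that calculus gives for constant acceleration.

```python
def path_from_acceleration(accel, x0, v0, t_end, steps):
    """Integrate acceleration twice over [0, t_end] to estimate the final position.

    Uses semi-implicit Euler: update the velocity first, then the position.
    """
    dt = t_end / steps
    x, v, t = x0, v0, 0.0
    for _ in range(steps):
        v += accel(t) * dt   # velocity accumulates acceleration
        x += v * dt          # position accumulates velocity
        t += dt
    return x


g = -9.81  # hypothetical constant acceleration in m/s^2 (a ball thrown straight up)
x_numeric = path_from_acceleration(lambda t: g, x0=0.0, v0=10.0, t_end=2.0, steps=10_000)
x_exact = 0.0 + 10.0 * 2.0 + 0.5 * g * 2.0 ** 2  # x0 + v0*t + a*t**2/2
print(x_numeric, x_exact)
```

The gap between the two values shrinks in proportion to the step size, which is the discrete counterpart of taking the limit.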

Maxwell's theory of electromagnetism and Einstein's theory of general relativity are also expressed in the language of differential calculus. Chemistry also uses calculus in determining reaction rates and radioactive decay. In biology, population dynamics starts with reproduction and death rates to model population changes.
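
As one concrete case, radioactive decay is usually modeled by the differential equation dN/dt = −λN, whose solution is N(t) = N₀e^(−λt). The sketch below assumes that model with a hypothetical decay constant λ = 0.1 per hour. It derives the half-life ln 2 / λ and checks the exact solution against a step-by-step numerical integration.

```python
import math


def remaining(n0, lam, t):
    """Exact solution of dN/dt = -lam * N with N(0) = n0."""
    return n0 * math.exp(-lam * t)


def half_life(lam):
    """Solve exp(-lam * T) = 1/2 for T, giving T = ln 2 / lam."""
    return math.log(2) / lam


lam = 0.1        # hypothetical decay constant, per hour
n0 = 1000.0      # hypothetical initial amount (arbitrary units)

# Cross-check: integrate dN/dt = -lam * N directly with small forward-Euler steps.
steps, t_end = 100_000, 10.0
dt = t_end / steps
n = n0
for _ in range(steps):
    n -= lam * n * dt

print(half_life(lam), remaining(n0, lam, t_end), n)
```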

Calculus can be used in conjunction with other mathematical disciplines. For example, it can be used with linear algebra to find the "best fit" linear approximation for a set of points in a domain. Or it can be used in probability theory to determine the probability of a continuous random variable from an assumed density function. In analytic geometry, the study of graphs of functions, calculus is used to find high points and low points (maxima and minima), slope, concavity and inflection points.
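
For the "best fit" line, one standard approach, assumed here because the text does not name a method, is least squares. Minimize S(m, c) = Σ(yᵢ − m xᵢ − c)², set both partial derivatives to zero, and solve the resulting 2 × 2 linear system. A minimal sketch with made-up data points:

```python
def best_fit_line(points):
    """Least-squares line y = m*x + c through a list of (x, y) pairs.

    Setting ∂S/∂m = 0 and ∂S/∂c = 0 for S = sum((y - m*x - c)**2) gives
        m * sxx + c * sx = sxy
        m * sx  + c * n  = sy
    which is solved in closed form below.
    """
    n = len(points)
    sx = sum(x for x, _ in points)
    sy = sum(y for _, y in points)
    sxx = sum(x * x for x, _ in points)
    sxy = sum(x * y for x, y in points)
    denom = n * sxx - sx * sx
    if denom == 0:
        raise ValueError("need at least two distinct x values")
    m = (n * sxy - sx * sy) / denom
    c = (sy - m * sx) / n
    return m, c


slope, intercept = best_fit_line([(0, 0), (1, 1), (2, 1)])  # hypothetical data
print(slope, intercept)
```

For larger problems the same normal equations are usually solved with a linear algebra library rather than by hand.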

Green's Theorem, which gives the relationship between a line integral around a simple closed curve C and a double integral over the plane region D bounded by C, is applied in an instrument known as a planimeter, which is used to calculate the area of a flat surface on a drawing. For example, it can be used to calculate the amount of area taken up by an irregularly shaped flower bed or swimming pool when designing the layout of a piece of property.
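
The idea behind the planimeter can be imitated in software when the boundary is known as a polygon. Green's theorem gives Area = ½∮(x dy − y dx), and evaluating that line integral edge by edge yields the so-called shoelace formula. The sketch below assumes the boundary has been digitized as a list of vertices. The L-shaped flower bed is a hypothetical example.

```python
def polygon_area(vertices):
    """Area enclosed by a simple polygon given as a list of (x, y) vertices in order.

    Green's theorem: Area = 1/2 * closed line integral of (x dy - y dx).
    On the straight edge from (x1, y1) to (x2, y2) that integral equals
    x1*y2 - x2*y1, so summing over the edges gives the shoelace formula.
    """
    total = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap around to close the boundary curve
        total += x1 * y2 - x2 * y1
    # The sum is positive for counter-clockwise order, negative for clockwise.
    return abs(total) / 2


# Hypothetical L-shaped flower bed, corners in metres, listed counter-clockwise.
bed = [(0, 0), (4, 0), (4, 1), (1, 1), (1, 3), (0, 3)]
print(polygon_area(bed))
```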

Discrete Green's Theorem, which gives the relationship between a double integral of a function over the region enclosed by a simple closed rectangular curve C and a linear combination of the antiderivative's values at the corner points of the curve, allows fast calculation of sums of values in rectangular domains. For example, it can be used to efficiently calculate sums over rectangular regions of images in order to rapidly extract features and detect objects; see also the summed area table algorithm.
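
A summed area table stores, for every pixel, the sum of all pixels above and to the left of it. Any rectangle sum then needs only the four corner values, in the spirit of the discrete theorem. The sketch below uses plain nested lists and a small made-up 3 × 3 "image". The function names are illustrative and do not come from any particular library.

```python
def summed_area_table(image):
    """S[i][j] = sum of image[r][c] over r < i and c < j (extra zero row and column)."""
    rows, cols = len(image), len(image[0])
    S = [[0] * (cols + 1) for _ in range(rows + 1)]
    for i in range(rows):
        for j in range(cols):
            # Inclusion-exclusion: add the block above and the block to the left,
            # then subtract their overlap, which was counted twice.
            S[i + 1][j + 1] = image[i][j] + S[i][j + 1] + S[i + 1][j] - S[i][j]
    return S


def rect_sum(S, top, left, bottom, right):
    """Sum of image[r][c] for top <= r < bottom and left <= c < right, from four corners."""
    return S[bottom][right] - S[top][right] - S[bottom][left] + S[top][left]


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]  # hypothetical 3x3 grayscale values
S = summed_area_table(image)
print(rect_sum(S, 1, 1, 3, 3))  # lower-right 2x2 block
```

Building the table costs one pass over the image; after that, every rectangle query takes constant time regardless of its size.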

In the realm of medicine, calculus can be used to find the optimal branching angle of a blood vessel so as to maximize flow. From the decay laws for a particular drug's elimination from the body, it is used to derive dosing laws. In nuclear medicine, it is used to build models of radiation transport in targeted tumor therapies.
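
To illustrate how a decay law can turn into a dosing law, the sketch below assumes the simplest textbook model, which the text does not specify. Equal doses are given instantly every τ hours, and elimination is first order, so the amount in the body decays as A(t) = A₀e^(−kt) between doses. Summing the resulting geometric series gives the amount after n doses and its steady-state limit. The dose, k, and τ values are hypothetical.

```python
import math


def amount_after_doses(dose, k, tau, n):
    """Drug amount just after the n-th dose (bolus dosing, first-order elimination).

    Each dosing interval multiplies what is left by r = exp(-k * tau), so the
    amount is dose * (1 + r + ... + r**(n-1)) = dose * (1 - r**n) / (1 - r).
    """
    r = math.exp(-k * tau)
    return dose * (1 - r ** n) / (1 - r)


def steady_state_peak(dose, k, tau):
    """Limit of amount_after_doses as n grows: dose / (1 - exp(-k * tau))."""
    return dose / (1 - math.exp(-k * tau))


# Hypothetical regimen: 100 mg every 8 h, elimination rate constant 0.1 per hour.
dose, k, tau = 100.0, 0.1, 8.0

# Cross-check by stepping through the doses one at a time.
amount = 0.0
for _ in range(5):
    amount = amount * math.exp(-k * tau) + dose  # decay over one interval, then dose
print(amount, amount_after_doses(dose, k, tau, 5), steady_state_peak(dose, k, tau))
```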

In economics, calculus allows for the determination of maximal profit by providing a way to easily calculate both marginal cost and marginal revenue.
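
Profit P(q) = R(q) − C(q) has P′(q) = 0 where marginal revenue R′(q) equals marginal cost C′(q). The sketch below uses hypothetical revenue and cost functions, approximates both marginals with central differences, and finds the crossing point by bisection. For these particular functions the answer can also be worked out by hand: 100 − 4q = 10 + 2q gives q = 15.

```python
def derivative(g, q, h=1e-6):
    """Central-difference approximation of g'(q)."""
    return (g(q + h) - g(q - h)) / (2 * h)


def revenue(q):
    """Hypothetical demand p = 100 - 2q, so revenue R(q) = p * q."""
    return (100 - 2 * q) * q


def cost(q):
    """Hypothetical cost: fixed 50, plus 10 per unit, plus a rising q**2 term."""
    return 50 + 10 * q + q ** 2


def marginal_gap(q):
    """Marginal revenue minus marginal cost; zero at the profit-maximizing q."""
    return derivative(revenue, q) - derivative(cost, q)


def profit_maximizing_quantity(lo, hi, tol=1e-9):
    """Bisection, assuming marginal_gap is positive at lo and negative at hi."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if marginal_gap(mid) > 0:
            lo = mid   # producing more still adds profit
        else:
            hi = mid
    return (lo + hi) / 2


q_star = profit_maximizing_quantity(0.0, 50.0)
print(q_star, revenue(q_star) - cost(q_star))
```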

Calculus is also used to find approximate solutions to equations; in practice, it is the standard way to solve differential equations and to find roots in most applications. Examples are methods such as Newton's method, fixed point iteration, and linear approximation. For instance, spacecraft use a variation of the Euler method to approximate curved courses within zero gravity environments.
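
Two of the named methods can be sketched briefly. Newton's method repeatedly replaces a guess x by the root of the tangent line at x, that is, x − f(x)/f′(x). The forward Euler method steps a differential equation y′ = g(t, y) along its tangent. The test problems, x² − 2 = 0 and y′ = y with y(0) = 1, are illustrative choices not taken from the text; their exact answers are √2 and e (at t = 1).

```python
def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Newton's method: x_next = x - f(x) / f'(x), the root of the tangent line at x."""
    x = x0
    for _ in range(max_iter):
        step = f(x) / df(x)
        x -= step
        if abs(step) < tol:
            return x
    raise RuntimeError("Newton's method did not converge")


def euler(g, y0, t0, t_end, steps):
    """Forward Euler for y' = g(t, y): follow the tangent for a fixed step size."""
    h = (t_end - t0) / steps
    t, y = t0, y0
    for _ in range(steps):
        y += h * g(t, y)
        t += h
    return y


root2 = newton(lambda x: x * x - 2, lambda x: 2 * x, x0=1.0)
approx_e = euler(lambda t, y: y, y0=1.0, t0=0.0, t_end=1.0, steps=1000)
print(root2, approx_e)
```

Newton's method roughly doubles the number of correct digits per step near a simple root, while forward Euler's error shrinks only in proportion to the step size, which is why practical solvers often use higher-order variants.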

Varieties

Over the years, many reformulations of calculus have been investigated for different purposes.

Non-standard calculus

Imprecise calculations with infinitesimals were widely replaced with the rigorous (ε, δ)-definition of limit starting in the 1870s. Meanwhile, calculations with infinitesimals persisted and often led to correct results. This led Abraham Robinson to investigate whether it was possible to develop a number system with infinitesimal quantities over which the theorems of calculus were still valid. In 1960, building upon the work of Edwin Hewitt and Jerzy Łoś, he succeeded in developing non-standard analysis. The theory of non-standard analysis is rich enough to be applied in many branches of mathematics. As such, books and articles dedicated solely to the traditional theorems of calculus often go by the title non-standard calculus.

Smooth infinitesimal analysis

This is another reformulation of the calculus in terms of infinitesimals. Based on the ideas of F. W. Lawvere and employing the methods of category theory, it views all functions as being continuous and incapable of being expressed in terms of discrete entities. One consequence is that the law of excluded middle does not hold in this formulation.

Constructive analysis

Constructive mathematics is a branch of mathematics that insists that proofs of the existence of a number, function, or other mathematical object should give a construction of the object. As such, constructive mathematics also rejects the law of excluded middle. Reformulations of calculus in a constructive framework are generally part of the subject of constructive analysis.

See also

Lists

- List of calculus topics

- List of derivatives and integrals in alternative calculi

- List of differentiation identities

- Publications in calculus

- Table of integrals

Other related topics

- Calculus of finite differences

- Calculus with polynomials

- Complex analysis

- Differential equation

- Differential geometry

- Elementary Calculus: An Infinitesimal Approach

- Fourier series

- Integral equation

- Mathematical analysis

- Multivariable calculus

- Non-classical analysis

- Non-standard analysis

- Non-standard calculus

- Precalculus (mathematical education)

- Product integral

- Stochastic calculus

- Taylor series

References

Notes

- ^ Latorre, Donald R.; Kenelly, John W.; Reed, Iris B.; Biggers, Sherry (2007), Calculus Concepts: An Applied Approach to the Mathematics of Change, Cengage Learning, Chapter 1, p. 2, ISBN 0-618-78981-2

- ^ Morris Kline, Mathematical thought from ancient to modern times, Vol. I

- ^ Archimedes, Method, in The Works of Archimedes ISBN 978-0-521-66160-7

- ^ Dun, Liu; Fan, Dainian; Cohen, Robert Sonné (1966). A comparison of Archimedes' and Liu Hui's studies of circles. Chinese studies in the history and philosophy of science and technology 130. Springer. p. 279. ISBN 0-7923-3463-9.

- ^ Zill, Dennis G.; Wright, Scott; Wright, Warren S. (2009). Calculus: Early Transcendentals (3 ed.). Jones & Bartlett Learning. p. xxvii. ISBN 0-7637-5995-3.

- ^ a b Katz, V. J. 1995. "Ideas of Calculus in Islam and India." Mathematics Magazine (Mathematical Association of America), 68(3):163-174.

- ^ Indian mathematics

- ^ von Neumann, J., "The Mathematician", in Heywood, R. B., ed., The Works of the Mind, University of Chicago Press, 1947, pp. 180–196. Reprinted in Bródy, F., Vámos, T., eds., The Neumann Compendium, World Scientific Publishing Co. Pte. Ltd., 1995, ISBN 981-02-2201-7, pp. 618–626.

- ^ André Weil: Number theory. An approach through history. From Hammurapi to Legendre. Birkhäuser Boston, Inc., Boston, MA, 1984, ISBN 0-8176-4565-9, p. 28.

- ^ Donald Allen: Calculus, http://www.math.tamu.edu/~dallen/history/calc1/calc1.html

- ^ Leibniz, Gottfried Wilhelm. The Early Mathematical Manuscripts of Leibniz. Cosimo, Inc., 2008. Page 228.

- ^ Unlu, Elif (April 1995). "Maria Gaetana Agnesi". Agnes Scott College.

- ^ Russell, Bertrand (1946). History of Western Philosophy. London: George Allen & Unwin Ltd. p. 857. "The great mathematicians of the seventeenth century were optimistic and anxious for quick results; consequently they left the foundations of analytical geometry and the infinitesimal calculus insecure. Leibniz believed in actual infinitesimals, but although this belief suited his metaphysics it had no sound basis in mathematics. Weierstrass, soon after the middle of the nineteenth century, showed how to establish the calculus without infinitesimals, and thus at last made it logically secure. Next came Georg Cantor, who developed the theory of continuity and infinite number. "Continuity" had been, until he defined it, a vague word, convenient for philosophers like Hegel, who wished to introduce metaphysical muddles into mathematics. Cantor gave a precise significance to the word, and showed that continuity, as he defined it, was the concept needed by mathematicians and physicists. By this means a great deal of mysticism, such as that of Bergson, was rendered antiquated."

Books

- Larson, Ron, Bruce H. Edwards (2010). Calculus, 9th ed., Brooks Cole Cengage Learning. ISBN 978-0-547-16702-2

- McQuarrie, Donald A. (2003). Mathematical Methods for Scientists and Engineers, University Science Books. ISBN 978-1-891389-24-5

- Salas, Saturnino L.; Hille, Einar; Etgen, Garret J. (2006). Calculus: One and Several Variables (10th ed.). Wiley. ISBN 978-0-471-69804-3.

- Stewart, James (2008). Calculus: Early Transcendentals, 6th ed., Brooks Cole Cengage Learning. ISBN 978-0-495-01166-8

- Thomas, George B., Maurice D. Weir, Joel Hass, Frank R. Giordano (2008), Calculus, 11th ed., Addison-Wesley. ISBN 0-321-48987-X

Other resources

Further reading

- Boyer, Carl Benjamin (1949). The History of the Calculus and its Conceptual Development. Hafner. Dover edition 1959, ISBN 0-486-60509-4

- Courant, Richard. Introduction to Calculus and Analysis 1. ISBN 978-3-540-65058-4

- Edmund Landau. Differential and Integral Calculus. American Mathematical Society. ISBN 0-8218-2830-4

- Robert A. Adams. (1999). Calculus: A Complete Course. ISBN 978-0-201-39607-2

- Albers, Donald J.; Richard D. Anderson and Don O. Loftsgaarden, ed. (1986) Undergraduate Programs in the Mathematics and Computer Sciences: The 1985-1986 Survey, Mathematical Association of America No. 7.

- John Lane Bell: A Primer of Infinitesimal Analysis, Cambridge University Press, 1998. ISBN 978-0-521-62401-5. Uses synthetic differential geometry and nilpotent infinitesimals.

- Florian Cajori, "The History of Notations of the Calculus." Annals of Mathematics, 2nd Ser., Vol. 25, No. 1 (Sep., 1923), pp. 1–46.

- Leonid P. Lebedev and Michael J. Cloud: "Approximating Perfection: a Mathematician's Journey into the World of Mechanics, Ch. 1: The Tools of Calculus", Princeton Univ. Press, 2004.

- Cliff Pickover. (2003). Calculus and Pizza: A Math Cookbook for the Hungry Mind. ISBN 978-0-471-26987-8

- Michael Spivak. (September 1994). Calculus. Publish or Perish. ISBN 978-0-914098-89-8

- Tom M. Apostol. (1967). Calculus, Volume 1, One-Variable Calculus with an Introduction to Linear Algebra. Wiley. ISBN 978-0-471-00005-1

- Tom M. Apostol. (1969). Calculus, Volume 2, Multi-Variable Calculus and Linear Algebra with Applications. Wiley. ISBN 978-0-471-00007-5

- Silvanus P. Thompson and Martin Gardner. (1998). Calculus Made Easy. ISBN 978-0-312-18548-0

- Mathematical Association of America. (1988). Calculus for a New Century; A Pump, Not a Filter, The Association, Stony Brook, NY. ED 300 252.

- Thomas/Finney. (1996). Calculus and Analytic Geometry, 9th ed. Addison Wesley. ISBN 978-0-201-53174-9

- Weisstein, Eric W. "Second Fundamental Theorem of Calculus." From MathWorld—A Wolfram Web Resource.

- Howard Anton, Irl Bivens, Stephen Davis. (2002). "Calculus". John Wiley and Sons Pte. Ltd. ISBN 978-81-265-1259-1

Online books

- Boelkins, M. (2012). "Active Calculus: a free, open text". Retrieved 1 Feb 2013 from http://gvsu.edu/s/km

- Crowell, B. (2003). "Calculus" Light and Matter, Fullerton. Retrieved 6 May 2007 from http://www.lightandmatter.com/calc/calc.pdf

- Garrett, P. (2006). "Notes on first year calculus" University of Minnesota. Retrieved 6 May 2007 from http://www.math.umn.edu/~garrett/calculus/first_year/notes.pdf

- Faraz, H. (2006). "Understanding Calculus" Retrieved 6 May 2007 from Understanding Calculus, URL http://www.understandingcalculus.com/ (HTML only)

- Keisler, H. J. (2000). "Elementary Calculus: An Approach Using Infinitesimals" Retrieved 29 August 2010 from http://www.math.wisc.edu/~keisler/calc.html

- Mauch, S. (2004). "Sean's Applied Math Book" California Institute of Technology. Retrieved 6 May 2007 from http://www.cacr.caltech.edu/~sean/applied_math.pdf

- Sloughter, Dan (2000). "Difference Equations to Differential Equations: An introduction to calculus". Retrieved 17 March 2009 from http://synechism.org/drupal/de2de/

- Stroyan, K.D. (2004). "A brief introduction to infinitesimal calculus" University of Iowa. Retrieved 6 May 2007 from http://www.math.uiowa.edu/~stroyan/InfsmlCalculus/InfsmlCalc.htm (HTML only)

- Strang, G. (1991). "Calculus" Massachusetts Institute of Technology. Retrieved 6 May 2007 from http://ocw.mit.edu/ans7870/resources/Strang/strangtext.htm

- Smith, William V. (2001). "The Calculus" Retrieved 4 July 2008 (HTML only).

External links

- Weisstein, Eric W., "Calculus", MathWorld.

- Topics on Calculus at PlanetMath.org.

- Calculus Made Easy (1914) by Silvanus P. Thompson Full text in PDF

- Calculus on In Our Time at the BBC.

- Calculus.org: The Calculus page at University of California, Davis – contains resources and links to other sites

- COW: Calculus on the Web at Temple University – contains resources ranging from pre-calculus and associated algebra

- Earliest Known Uses of Some of the Words of Mathematics: Calculus & Analysis

- Online Integrator (WebMathematica) from Wolfram Research

- The Role of Calculus in College Mathematics from ERICDigests.org

- OpenCourseWare Calculus from the Massachusetts Institute of Technology

- Infinitesimal Calculus – an article on its historical development, in Encyclopedia of Mathematics, ed. Michiel Hazewinkel.

- Calculus for Beginners and Artists by Daniel Kleitman, MIT

- Calculus Problems and Solutions by D. A. Kouba

- Donald Allen's notes on calculus

- Calculus training materials at imomath.com

- (English) (Arabic) The Excursion of Calculus, 1772

UpToDate Contents

- 1. Diagnosis and acute management of suspected nephrolithiasis in adults

- 2. Options in the management of renal and ureteral stones in adults

- 3. Management of ureteral calculi

- 4. The first kidney stone and asymptomatic nephrolithiasis in adults

- 5. Clinical features and diagnosis of nephrolithiasis in children

English Journal

- Hyodeoxycholic acid protects the neurovascular unit against oxygen-glucose deprivation and reoxygenation-induced injury .

- Li CX, Wang XQ, Cheng FF, Yan X, Luo J, Wang QG.

- Neural regeneration research. 2019 Nov;14(11)1941-1949.

- Calculus bovis is commonly used for the treatment of stroke in traditional Chinese medicine. Hyodeoxycholic acid (HDCA) is a bioactive compound extracted from calculus bovis. When combined with cholic acid, baicalin and jasminoidin, HDCA prevents hypoxia-reoxygenation-induced brain injury by suppre

- PMID 31290452

- Sialendoscopy plus laser lithotripsy in sialolithiasis of the submandibular gland in 64 patients: A simple and safe procedure.

- Guenzel T, Hoch S, Heinze N, Wilhelm T, Gueldner C, Franzen A, Coordes A, Lieder A, Wiegand S.

- Auris, nasus, larynx. 2019 Oct;46(5)797-802.

- To demonstrate the safety and efficiency of holmium laser-assisted lithotripsy during sialendoscopy of the submandibular gland using a retrospective, interventional consecutive case series. We performed 374 sialendoscopies between 2008 and 2015 and evaluated all patients regarding clinical symptoms,

- PMID 30765274

- Predictors of Nephrolithiasis, Osteoporosis, and Mortality in Primary Hyperparathyroidism.

- Reid LJ, Muthukrishnan B, Patel D, Seckl JR, Gibb FW.

- The Journal of clinical endocrinology and metabolism. 2019 Sep;104(9)3692-3700.

- Primary hyperparathyroidism (PHPT) has a prevalence of 0.86% and is associated with increased risk of nephrolithiasis and osteoporosis. PHPT may also be associated with increased risk of cardiovascular disease and mortality. To identify risk factors for nephrolithiasis, osteoporosis, and mortality i

- PMID 30916764

Japanese Journal

- On supervisor synthesis using hybrid process calculus (Software Science)

- 川北 悠人,結縁 祥治

- IEICE Technical Report 115(20), 7-10, 2015-05-11

- NAID 40020492025

- A system of pair sentential calculus that has a representation of the Liar sentence

- 石井 忠夫

- Bulletin of the Faculty of Information and Culture, Niigata University of International and Information Studies 1, 1-10, 2015-04-01

- … .holds, we will formalize a pair sentential calculus and represent the behavior of Liar sentence. To formalize the pair sentential calculus, we firstly introduce the stage numbers i, j in which each of pair-sentence holds, i.e., (Ai,Bj) and which means a situation of A at a stage i is referential to the situation of B at a stage j. …

- NAID 120005594457

- A NEW CLASS OF AMPLITUDE FUNCTIONS FOR THE STATIONARY PHASE METHOD ON AN ABSTRACT WIENER SPACE

- TANIGUCHI Setsuo

- Kyushu Journal of Mathematics 69(1), 219-228, 2015

- A stochastic oscillatory integral is a probabilistic counterpart to a Feynman path integral, and the stationary phase method for stochastic oscillatory integrals relates to the semi-classical limits i …

- NAID 130005076143

- TAP 1 day training: an attempt at the first PNL training program in Japan

- 石戸 則孝, 佐古 智子, 横山 昌平 [et al.], 塩塚 洋一, 市川 孝治, 山本 康雄, 高本 均

- Japanese Journal of Endourology 28(1), 115-122, 2015

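- [Introduction] In Japan, percutaneous nephrolithotomy (abbreviated PNL) tends to be avoided because of its invasiveness, and opportunities to gain PNL training have become fewer. Because TUL-assisted PNL (abbreviated TAP), in which TUL and PNL are performed simultaneously, may be usable as a training tool for PNL, co-hosted with COOK, at Kurashiki Seijinbyo Center, a seminar, surgical observation, hand …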

- NAID 130005073692

Related Links

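- calculus [noun] (pathology) stone (in the kidney, etc.); (dentistry) dental calculus [syn.] tartar; (mathematics) differential and integral calculus [syn.] infini... [pronunciation] kǽlkjələs [katakana] kyarukyurasu [inflection] (pl.) calculi | calculuses - online English-Japanese / Japanese-English dictionary search provided by ALC ...

- What is calculus? Meaning and Japanese translation. [noun] (pl. -li /-lài/, ~es) 1 (mathematics) method of calculation: differential [integral] calculus, the method of differentiation [integration] 2 (disease) stone (in the kidney, etc.); dental calculus. Derivative of calculus: calculous (adj.) (disease) relating to a stone - over 800,000 entries, example sentences ...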

- The branch of mathematics that finds the maximum or minimum values of functions by means of differentiation and integration. Calculus can be used to calculate such things as rates of change, the area bounded by curves, and the ...

★Link table★

| Linked from | "tartar", "dental calculus", "calculi", "ureteral stone", "stone" |

"tartar"

- n.

"dental calculus"

- English

- dental tophus, dental calculus, odontolith, dental calculi, calculus, calculi, scale, tartar

- Latin

- calculus dentalis

- Related

- dental plaque

"calculi"

- np.

"ureteral stone"

- (disease name) ureteral stone

"stone"