Source (authority): the free encyclopedia "Wikipedia", 2015/10/02 21:43:40 (JST)

wiki ja

A photo of a flower compressed with JPEG, with the loss rate increasing from left to right.

| Filename extension | .jpeg, .jpg, .jpe |
|---|---|
| MIME type | image/jpeg |
| Type code | JPEG |
| UTI | public.jpeg |
| Magic number | \xff\xd8 |
| Developed by | Joint Photographic Experts Group |
| Contains | Still images, text |

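JPEG ("jay-peg", Joint Photographic Experts Group) is one of the methods for compressing the digital data of still images handled by computers and other devices. It is also the abbreviation, an acronym, of the organization that created it (ISO/IEC JTC 1/SC 29/WG 1, Joint Photographic Experts Group). Image files that use the JPEG method most often carry the extension jpg, although jpeg and other extensions are also used.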

It is generally known as a lossy image format. It also supports lossless compression, but the lossless mode is rarely used, partly because of patent issues.

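The standard does not define an official file format for particular kinds of images; the JFIF format (identified by "JFIF" written in the identifier field that begins at byte offset 6 of the file header) has become the de facto standard file format. A variant that can record video is Motion JPEG. For stereoscopic (3D) viewing there is the Stereo JPEG (JPS) format[1].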
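As a rough illustration of the JFIF identification described above, the following Python sketch checks for the SOI marker (`FF D8`) followed by an APP0 marker (`FF E0`) whose identifier bytes, starting at offset 6, read `JFIF` plus a terminating zero byte. The function name `looks_like_jfif` is hypothetical, and the check is deliberately strict: a file that places another segment first (for example an Exif APP1 segment) is not recognized.

```python
def looks_like_jfif(data: bytes) -> bool:
    """Return True if data starts with SOI followed by a JFIF APP0 segment."""
    return (
        len(data) >= 11
        and data[0:2] == b"\xff\xd8"    # SOI marker
        and data[2:4] == b"\xff\xe0"    # APP0 marker
        # data[4:6] holds the big-endian APP0 segment length
        and data[6:11] == b"JFIF\x00"   # identifier, zero-terminated
    )


# A typical JFIF header: SOI, APP0, length 16, "JFIF\0", version 1.01, ...
print(looks_like_jfif(bytes.fromhex("ffd8ffe000104a46494600010100")))  # True
```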

JPEG is also widely used as a recording format in digital cameras, where various optional features are used and the data is packaged in formats that extend JFIF, such as the Exchangeable image file format (EXIF).

Contents

- 1 Technical details

- 1.1 Encoding method

- 1.2 Noise

- 2 Standards documents

- 3 Adoption on the Internet and its background

- 4 References

- 5 See also

- 6 External links

Technical details

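This section describes the lossy compression method in common use. Note that JPEG's lossless compression uses a technique entirely different from its lossy compression; JPEG 2000, by contrast, uses the same technique for both.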

Encoding method

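JPEG divides an image into fixed-size blocks (8×8 pixels) and, block by block, uses the discrete cosine transform (DCT) to convert the data from the spatial domain to the frequency domain. The transform itself does not reduce the amount of information. The transformed data is reduced in information content by quantization and then compressed by entropy coding with Huffman codes. Entropy coding compresses data by assigning codes of different lengths according to how likely each value is to occur.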

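The DCT does not by itself reduce the amount of information, but it concentrates the energy in the low-frequency components, and JPEG exploits this both to reduce information through quantization and to raise the compression ratio of entropy coding. Quantizing an ordinary image directly would cause severe degradation, but once the important components have been gathered together in a small region, the image can be quantized while preserving its original character. In addition, this skew of the data toward the low-frequency components also improves the compression ratio of entropy coding. JPEG commonly uses a table of coefficients called a quantization matrix to quantize the high-frequency components more coarsely than the low-frequency components.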

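Entropy coding uses Huffman codes. Huffman coding is simple to process and therefore computationally cheap, and it achieves a very high compression ratio when the input matches the ideal data its code set assumes. When the input does not match the code set, the compression ratio drops instead. To address this problem, the successor format JPEG 2000 adopted arithmetic coding.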
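To make the variable-length idea concrete, here is a minimal Python sketch that builds Huffman code lengths from symbol counts and then assigns canonical codewords, so that more frequent symbols receive shorter codes. The symbol counts are hypothetical, and the sketch is a simplification: baseline JPEG Huffman-codes run/size symbols rather than raw values, caps code lengths at 16 bits, and transmits its tables in DHT segments, none of which is handled here.

```python
import heapq


def huffman_code_lengths(freqs):
    """Map each symbol to its Huffman code length in bits, given symbol counts."""
    if len(freqs) == 1:
        return {sym: 1 for sym in freqs}
    # Heap entries: (count, unique tie-breaker, symbols in this subtree)
    heap = [(f, i, [sym]) for i, (sym, f) in enumerate(freqs.items())]
    heapq.heapify(heap)
    lengths = {sym: 0 for sym in freqs}
    next_id = len(heap)
    while len(heap) > 1:
        f1, _, syms1 = heapq.heappop(heap)
        f2, _, syms2 = heapq.heappop(heap)
        for sym in syms1 + syms2:
            lengths[sym] += 1  # each merge adds one bit to every symbol below it
        heapq.heappush(heap, (f1 + f2, next_id, syms1 + syms2))
        next_id += 1
    return lengths


def canonical_codes(lengths):
    """Assign prefix-free codewords in order of (length, symbol)."""
    codes, code, prev_len = {}, 0, 0
    for sym, length in sorted(lengths.items(), key=lambda kv: (kv[1], kv[0])):
        code <<= length - prev_len
        codes[sym] = format(code, f"0{length}b")
        code += 1
        prev_len = length
    return codes


counts = {"a": 45, "b": 13, "c": 12, "d": 16, "e": 9, "f": 5}
print(canonical_codes(huffman_code_lengths(counts)))
# {'a': '0', 'b': '100', 'c': '101', 'd': '110', 'e': '1110', 'f': '1111'}
```

The most frequent symbol `a` gets a 1-bit code, while the rare `e` and `f` get 4-bit codes.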

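The number of color components in the image before the frequency-domain transform can be chosen from 1 to 4, and the color system that determines what each component represents can also be chosen freely. Grayscale data with one component, RGB and YCbCr with three components, and YMCK with four components can therefore all be used. However, the standard neither specifies a color system nor records which color system is in use, which is a major compatibility problem. JFIF therefore specifies that the YCbCr color system be used, with the components in the order Y, Cb, Cr. The sampling factors, which express the spatial subsampling of each color component, can be set independently in the horizontal and vertical directions for each component, so any of the common formats 4:4:4, 4:2:2, 4:2:0 and 4:1:1 can be selected.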
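The sampling factors determine how many samples each component stores. A minimal Python sketch, assuming the ceiling rule that ITU-T T.81 uses for component dimensions: a component with factors (H_i, V_i) in an X×Y image has ⌈X·H_i/H_max⌉ × ⌈Y·V_i/V_max⌉ samples. The `SUBSAMPLING` mapping is a hypothetical helper listing common (H, V) choices for Y, Cb and Cr; these are typical values, not the only valid ones.

```python
def component_dimensions(width, height, factors):
    """Return (width, height) in samples for each component's (H, V) factors."""
    h_max = max(h for h, _ in factors)
    v_max = max(v for _, v in factors)
    # -(-a // b) is integer ceiling division
    return [(-(-width * h // h_max), -(-height * v // v_max)) for h, v in factors]


# Typical (H, V) factors for Y, Cb, Cr (assumed common mappings)
SUBSAMPLING = {
    "4:4:4": [(1, 1), (1, 1), (1, 1)],
    "4:2:2": [(2, 1), (1, 1), (1, 1)],
    "4:2:0": [(2, 2), (1, 1), (1, 1)],
    "4:1:1": [(4, 1), (1, 1), (1, 1)],
}

print(component_dimensions(641, 480, SUBSAMPLING["4:2:0"]))
# [(641, 480), (321, 240), (321, 240)]
```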

Noise

Because JPEG transforms the image block by block, raising the compression ratio produces noise at the block boundaries, known as block noise.

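In addition, the transform to the frequency domain concentrates the image's energy in the low-frequency components, leaving little energy in the high-frequency components. Quantization therefore rounds the high-frequency components to zero, and they disappear. Abrupt changes in the image can then no longer be represented adequately, and around edges a noise appears that is called mosquito noise, after mosquitoes swarming around a single point.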

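Because the color-difference (chroma) components are subsampled, JPEG handles red particularly poorly, and noise tends to appear in red areas.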

Standards documents

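Because the standard was created by a joint group, it has been issued by both sides: the International Organization for Standardization (ISO) together with the International Electrotechnical Commission (IEC), and the International Telecommunication Union (ITU). Following them, it has also been standardized as a Japanese Industrial Standard (JIS).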

- ITU-T Recommendation T.81

- ISO/IEC 10918-1:1994

- JIS X 4301

Adoption on the Internet and its background

In the early days of the Web, JPEG and GIF were the two leading standard image formats supported by web browsers.

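Because of the characteristics of JPEG's coding method, even for computer-generated illustrations, in which the same color often extends over wide areas, the file size grows in proportion to the size of the image itself. For this reason, in the era when typical users' last-mile connections were narrowband, such as dial-up, a division of use existed to keep data transfer small: GIF for computer-generated illustrations and JPEG for digital still photographs.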

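In 1999, the anti-GIF movement sparked by GIF's patent problems (see that article) prompted a shift away from GIF toward JPEG and toward PNG, a new free format. Because PNG often could not be displayed in browsers of the time without installing a plug-in, among other problems, GIF images were more often replaced by JPEG.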

Today, the patent on GIF's LZW compression has expired, making GIF usable again as a free image format, and PNG is supported by nearly all browsers. Both formats remain in wide use on the Internet.

References

- ^ http://www.nvidia.co.jp/object/3d-vision-3d-pictures-jp-old.html

See also

- Derived standards

- CPKT format

- JPEG-HDR

- Lossless JPEG

- Post-JPEG standards

- JPEG 2000

- JPEG XR (HD Photo)

- WebP

- Data compression

- JBIG

- Hiroshi Yasuda

- Progressive JPEG

- Portable Network Graphics (PNG)

- Graphics Interchange Format (GIF)

- libjpeg

External links

- JPEG official website (in English; the Japanese pages were incomplete as of December 2007)

- W3C Overview of JPEG (in English; includes the JPEG and JFIF specifications)


wiki en

A photo of a cat with the compression rate decreasing, and hence quality increasing, from left to right.

| Filename extension | .jpg, .jpeg, .jpe |
|---|---|
| Internet media type | image/jpeg |
| Type code | JPEG |
| Uniform Type Identifier (UTI) | public.jpeg |
| Magic number | ff d8 ff |
| Developed by | Joint Photographic Experts Group |
| Type of format | lossy image format |
| Standard | ISO/IEC 10918, ITU-T T.81, ITU-T T.83, ITU-T T.84, ITU-T T.86 |
| Website | www |

JPEG (/ˈdʒeɪpɛɡ/ JAY-peg)[1] is a commonly used method of lossy compression for digital images, particularly for those images produced by digital photography. The degree of compression can be adjusted, allowing a selectable tradeoff between storage size and image quality. JPEG typically achieves 10:1 compression with little perceptible loss in image quality.[citation needed]

JPEG compression is used in a number of image file formats. JPEG/Exif is the most common image format used by digital cameras and other photographic image capture devices; along with JPEG/JFIF, it is the most common format for storing and transmitting photographic images on the World Wide Web.[citation needed] These format variations are often not distinguished, and are simply called JPEG.

The term "JPEG" is an acronym for the Joint Photographic Experts Group, which created the standard. The MIME media type for JPEG is image/jpeg, except in older Internet Explorer versions, which provide a MIME type of image/pjpeg when uploading JPEG images.[2] JPEG files usually have a filename extension of .jpg or .jpeg.

JPEG/JFIF supports a maximum image size of 65535×65535 pixels,[3] hence up to 4 gigapixels (for an aspect ratio of 1:1).

Contents

- 1 The JPEG standard

- 2 Typical usage

- 3 JPEG compression

- 3.1 Lossless editing

- 4 JPEG files

- 4.1 JPEG filename extensions

- 4.2 Color profile

- 5 Syntax and structure

- 6 JPEG codec example

- 6.1 Encoding

- 6.1.1 Color space transformation

- 6.1.2 Downsampling

- 6.1.3 Block splitting

- 6.1.4 Discrete cosine transform

- 6.1.5 Quantization

- 6.1.6 Entropy coding

- 6.2 Compression ratio and artifacts

- 6.3 Decoding

- 6.4 Required precision

- 7 Effects of JPEG compression

- 7.1 Sample photographs

- 8 Lossless further compression

- 9 Derived formats for stereoscopic 3D

- 9.1 JPEG Stereoscopic

- 9.2 JPEG Multi-Picture Format

- 10 Patent issues

- 11 Standards

- 12 Implementations

- 13 See also

- 14 References

- 15 External links

The JPEG standard

"JPEG" stands for Joint Photographic Experts Group, the name of the committee that created the JPEG standard and also other still picture coding standards. The "Joint" stood for ISO TC97 WG8 and CCITT SGVIII. In 1987 ISO TC 97 became ISO/IEC JTC1 and in 1992 CCITT became ITU-T. Currently on the JTC1 side JPEG is one of two sub-groups of ISO/IEC Joint Technical Committee 1, Subcommittee 29, Working Group 1 (ISO/IEC JTC 1/SC 29/WG 1) – titled as Coding of still pictures.[4][5][6] On the ITU-T side ITU-T SG16 is the respective body. The original JPEG group was organized in 1986,[7] issuing the first JPEG standard in 1992, which was approved in September 1992 as ITU-T Recommendation T.81[8] and in 1994 as ISO/IEC 10918-1.

The JPEG standard specifies the codec, which defines how an image is compressed into a stream of bytes and decompressed back into an image, but not the file format used to contain that stream.[9] The Exif and JFIF standards define the commonly used file formats for interchange of JPEG-compressed images.

JPEG standards are formally named as Information technology – Digital compression and coding of continuous-tone still images. ISO/IEC 10918 consists of the following parts:

| Part | ISO/IEC standard | ITU-T Rec. | First public release date | Latest amendment | Title | Description |
|---|---|---|---|---|---|---|
| Part 1 | ISO/IEC 10918-1:1994 | T.81 (09/92) | Sep 18, 1992 | | Requirements and guidelines | |
| Part 2 | ISO/IEC 10918-2:1995 | T.83 (11/94) | Nov 11, 1994 | | Compliance testing | rules and checks for software conformance (to Part 1) |
| Part 3 | ISO/IEC 10918-3:1997 | T.84 (07/96) | Jul 3, 1996 | Apr 1, 1999 | Extensions | set of extensions to improve the Part 1, including the SPIFF file format |
| Part 4 | ISO/IEC 10918-4:1999 | T.86 (06/98) | Jun 18, 1998 | Jun 29, 2012 | Registration of JPEG profiles, SPIFF profiles, SPIFF tags, SPIFF colour spaces, APPn markers, SPIFF compression types and Registration Authorities (REGAUT) | methods for registering some of the parameters used to extend JPEG |
| Part 5 | ISO/IEC 10918-5:2013 | T.871 (05/11) | May 14, 2011 | | JPEG File Interchange Format (JFIF) | A popular format which has been the de facto file format for images encoded by the JPEG standard. In 2009, the JPEG Committee formally established an Ad Hoc Group to standardize JFIF as JPEG Part 5.[11] |
| Part 6 | ISO/IEC 10918-6:2013 | T.872 (06/12) | Jun 2012 | | Application to printing systems | Specifies a subset of features and application tools for the interchange of images encoded according to the ISO/IEC 10918-1 for printing. |

Ecma International TR/98 specifies the JPEG File Interchange Format (JFIF); the first edition was published in June 2009.[12]

Typical usage

The JPEG compression algorithm is at its best on photographs and paintings of realistic scenes with smooth variations of tone and color. For web usage, where the amount of data used for an image is important, JPEG is very popular. JPEG/Exif is also the most common format saved by digital cameras.

On the other hand, JPEG may not be as well suited for line drawings and other textual or iconic graphics, where the sharp contrasts between adjacent pixels can cause noticeable artifacts. Such images may be better saved in a lossless graphics format such as TIFF, GIF, PNG, or a raw image format. The JPEG standard actually includes a lossless coding mode, but that mode is not supported in most products.

Because JPEG is typically used as a lossy compression method, which reduces image fidelity to some degree, it should not be used in scenarios where the exact reproduction of the data is required (such as some scientific and medical imaging applications and certain technical image processing work).

JPEG is also not well suited to files that will undergo multiple edits, as some image quality will usually be lost each time the image is decompressed and recompressed, particularly if the image is cropped or shifted, or if encoding parameters are changed – see digital generation loss for details. To avoid this, an image that is being modified or may be modified in the future can be saved in a lossless format, with a copy exported as JPEG for distribution.

JPEG compression

JPEG uses a lossy form of compression based on the discrete cosine transform (DCT). This mathematical operation converts the image (or each frame/field of a video source) from the spatial (2D) domain into the frequency domain (a.k.a. transform domain). A perceptual model based loosely on the human psychovisual system discards high-frequency information, i.e. sharp transitions in intensity and color hue. In the transform domain, the process of reducing information is called quantization. In simpler terms, quantization is a method for optimally reducing a large number scale (with different occurrences of each number) into a smaller one, and the transform domain is a convenient representation of the image because the high-frequency coefficients, which contribute less to the overall picture than other coefficients, are characteristically small values with high compressibility. The quantized coefficients are then sequenced and losslessly packed into the output bitstream. Nearly all software implementations of JPEG permit user control over the compression ratio (as well as other optional parameters), allowing the user to trade off picture quality for smaller file size. In embedded applications (such as miniDV, which uses a similar DCT-compression scheme), the parameters are pre-selected and fixed for the application.

The compression method is usually lossy, meaning that some original image information is lost and cannot be restored, possibly affecting image quality. There is an optional lossless mode defined in the JPEG standard. However, this mode is not widely supported in products.

There is also an interlaced "Progressive JPEG" format, in which data is compressed in multiple passes of progressively higher detail. This is ideal for large images that will be displayed while downloading over a slow connection, allowing a reasonable preview after receiving only a portion of the data. However, support for progressive JPEGs is not universal. When progressive JPEGs are received by programs that do not support them (such as versions of Internet Explorer before Windows 7)[13] the software displays the image only after it has been completely downloaded.

There are also many medical imaging and traffic systems that create and process 12-bit JPEG images, normally grayscale images. The 12-bit JPEG format has been part of the JPEG specification for some time, but this format is not as widely supported.

Lossless editing

A number of alterations to a JPEG image can be performed losslessly (that is, without recompression and the associated quality loss) as long as the image size is a multiple of 1 MCU block (Minimum Coded Unit) (usually 16 pixels in both directions, for 4:2:0 chroma subsampling). Utilities that implement this include jpegtran, with user interface Jpegcrop, and the JPG_TRANSFORM plugin to IrfanView.

Blocks can be rotated in 90 degree increments, flipped in the horizontal, vertical and diagonal axes and moved about in the image. Not all blocks from the original image need to be used in the modified one.

The top and left edge of a JPEG image must lie on an 8 × 8 pixel block boundary, but the bottom and right edge need not do so. This limits the possible lossless crop operations, and also prevents flips and rotations of an image whose bottom or right edge does not lie on a block boundary for all channels (because the edge would end up on top or left, where – as aforementioned – a block boundary is obligatory).
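The alignment constraints above can be checked with simple modular arithmetic. This Python sketch assumes the MCU is 8·H_max by 8·V_max pixels (16×16 for the default 4:2:0 factors) and uses one plausible policy for a crop: move the top-left corner up and left onto an MCU boundary and enlarge the region so that the requested area is still covered. The function names are hypothetical, and the rotation check is conservative (it requires both dimensions to be whole MCUs).

```python
def mcu_size(h_max, v_max):
    """MCU width and height in pixels for the largest sampling factors."""
    return 8 * h_max, 8 * v_max


def align_crop(x, y, width, height, h_max=2, v_max=2):
    """Snap the crop's top-left corner to an MCU boundary.

    Width and height grow by the amount the corner moved, so the
    originally requested area stays inside the returned crop.
    """
    mcu_w, mcu_h = mcu_size(h_max, v_max)
    ax, ay = x - x % mcu_w, y - y % mcu_h
    return ax, ay, width + (x - ax), height + (y - ay)


def fits_mcu_grid(width, height, h_max=2, v_max=2):
    """True if both image dimensions are whole multiples of the MCU size."""
    mcu_w, mcu_h = mcu_size(h_max, v_max)
    return width % mcu_w == 0 and height % mcu_h == 0


print(align_crop(37, 20, 100, 50))  # (32, 16, 105, 54) for 16x16 MCUs
print(fits_mcu_grid(1000, 750))     # False: 1000 is not a multiple of 16
```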

When using lossless cropping, if the bottom or right side of the crop region is not on a block boundary then the rest of the data from the partially used blocks will still be present in the cropped file and can be recovered. It is also possible to transform between baseline and progressive formats without any loss of quality, since the only difference is the order in which the coefficients are placed in the file.

Furthermore, several JPEG images can be losslessly joined together, as long as they were saved with the same quality and the edges coincide with block boundaries.

JPEG files

The file format known as "JPEG Interchange Format" (JIF) is specified in Annex B of the standard. However, this "pure" file format is rarely used, primarily because of the difficulty of programming encoders and decoders that fully implement all aspects of the standard and because of certain shortcomings of the standard:

- Color space definition

- Component sub-sampling registration

- Pixel aspect ratio definition.

Several additional standards have evolved to address these issues. The first of these, released in 1992, was JPEG File Interchange Format (or JFIF), followed in recent years by Exchangeable image file format (Exif) and ICC color profiles. JFIF and Exif both use the actual JIF byte layout, consisting of different markers, but in addition employ one of the JIF standard's extension points, namely the application markers: JFIF uses APP0, while Exif uses APP1. Within these segments of the file, which were left for future use in the JIF standard and are not read by it, these standards add specific metadata.

Thus, in some ways JFIF is a cutdown version of the JIF standard in that it specifies certain constraints (such as not allowing all the different encoding modes), while in other ways it is an extension of JIF due to the added metadata. The documentation for the original JFIF standard states:[14]

- JPEG File Interchange Format is a minimal file format which enables JPEG bitstreams to be exchanged between a wide variety of platforms and applications. This minimal format does not include any of the advanced features found in the TIFF JPEG specification or any application specific file format. Nor should it, for the only purpose of this simplified format is to allow the exchange of JPEG compressed images.

Image files that employ JPEG compression are commonly called "JPEG files", and are stored in variants of the JIF image format. Most image capture devices (such as digital cameras) that output JPEG are actually creating files in the Exif format, the format that the camera industry has standardized on for metadata interchange. On the other hand, since the Exif standard does not allow color profiles, most image editing software stores JPEG in JFIF format, and also includes the APP1 segment from the Exif file to include the metadata in an almost-compliant way; the JFIF standard is interpreted somewhat flexibly.[15]

Strictly speaking, the JFIF and Exif standards are incompatible because they each specify that their marker segment (APP0 or APP1, respectively) appears first. In practice, most JPEG files contain a JFIF marker segment that precedes the Exif header. This allows older readers to correctly handle the older format JFIF segment, while newer readers also decode the following Exif segment, being less strict about requiring it to appear first.

JPEG filename extensions

The most common filename extensions for files employing JPEG compression are .jpg and .jpeg, though .jpe, .jfif and .jif are also used. It is also possible for JPEG data to be embedded in other file types – TIFF encoded files often embed a JPEG image as a thumbnail of the main image; and MP3 files can contain a JPEG of cover art, in the ID3v2 tag.

Color profile

Many JPEG files embed an ICC color profile (color space). Commonly used color profiles include sRGB and Adobe RGB. Because these color spaces use a non-linear transformation, the dynamic range of an 8-bit JPEG file is about 11 stops; see gamma curve.

Syntax and structure

A JPEG image consists of a sequence of segments, each beginning with a marker, each of which begins with a 0xFF byte followed by a byte indicating what kind of marker it is. Some markers consist of just those two bytes; others are followed by two bytes indicating the length of marker-specific payload data that follows. (The length includes the two bytes for the length, but not the two bytes for the marker.) Some markers are followed by entropy-coded data; the length of such a marker does not include the entropy-coded data. Note that consecutive 0xFF bytes are used as fill bytes for padding purposes, although this fill byte padding should only ever take place for markers immediately following entropy-coded scan data (see JPEG specification section B.1.1.2 and E.1.2 for details; specifically "In all cases where markers are appended after the compressed data, optional 0xFF fill bytes may precede the marker").
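The segment layout described above can be walked with a short loop. This is a minimal Python sketch, not a full parser: `list_segments` is a hypothetical name, only SOI, EOI and RST0 to RST7 are treated as markers without a length field (following the marker table in this section), and it stops at the first SOS because the entropy-coded data that follows needs separate handling.

```python
import struct

# Markers consisting of just the two marker bytes, with no length field
STANDALONE_MARKERS = {0xD8, 0xD9, *range(0xD0, 0xD8)}  # SOI, EOI, RST0-RST7


def list_segments(data: bytes):
    """Return (marker, offset, payload_length) for each segment up to the first SOS."""
    segments = []
    i = 0
    while i + 1 < len(data):
        if data[i] != 0xFF:
            raise ValueError(f"expected a marker at offset {i}")
        marker = data[i + 1]
        if marker == 0xFF:  # fill byte: the real marker starts one byte later
            i += 1
            continue
        if marker in STANDALONE_MARKERS:
            segments.append((marker, i, 0))
            i += 2
            if marker == 0xD9:  # EOI
                break
            continue
        # The length counts its own two bytes but not the two marker bytes
        (length,) = struct.unpack(">H", data[i + 2:i + 4])
        segments.append((marker, i, length - 2))
        if marker == 0xDA:  # SOS: entropy-coded data follows its header
            break
        i += 2 + length
    return segments
```

For example, bytes consisting of SOI, a 16-byte APP0, a COM segment containing `hi`, and an SOS header yield `[(0xD8, 0, 0), (0xE0, 2, 14), (0xFE, 20, 2), (0xDA, 26, 6)]`.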

Within the entropy-coded data, after any 0xFF byte, a 0x00 byte is inserted by the encoder before the next byte, so that there does not appear to be a marker where none is intended, preventing framing errors. Decoders must skip this 0x00 byte. This technique, called byte stuffing (see JPEG specification section F.1.2.3), is only applied to the entropy-coded data, not to marker payload data. Note, however, that entropy-coded data has a few markers of its own; specifically the Restart markers (0xD0 through 0xD7), which are used to isolate independent chunks of entropy-coded data to allow parallel decoding, and encoders are free to insert these Restart markers at regular intervals (although not all encoders do this).
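Undoing byte stuffing is a small state machine over the scan bytes. In this Python sketch (the name `split_scan` is hypothetical), `FF 00` becomes a literal 0xFF, a restart marker closes the current interval, an extra 0xFF is treated as a fill byte, and any other marker ends the scan; the function returns the unstuffed intervals and the offset where the terminating marker begins.

```python
def split_scan(scan: bytes):
    """Unstuff entropy-coded data, splitting it into restart intervals."""
    intervals = []
    current = bytearray()
    i = 0
    while i < len(scan):
        b = scan[i]
        if b != 0xFF:
            current.append(b)
            i += 1
            continue
        nxt = scan[i + 1] if i + 1 < len(scan) else None
        if nxt == 0x00:  # stuffed byte: keep the 0xFF, drop the 0x00
            current.append(0xFF)
            i += 2
        elif nxt == 0xFF:  # fill byte preceding a marker
            i += 1
        elif nxt is not None and 0xD0 <= nxt <= 0xD7:  # RSTn: new interval
            intervals.append(bytes(current))
            current = bytearray()
            i += 2
        else:  # any other marker (or truncated data) ends the scan
            break
    intervals.append(bytes(current))
    return intervals, i


print(split_scan(b"\x12\xff\x00\x34\xff\xd0\x56\xff\xd9"))
# ([b'\x12\xff4', b'V'], 7)
```

Each returned interval can be decoded independently, which is what makes the parallel decoding mentioned above possible.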

| Short name | Bytes | Payload | Name | Comments |
|---|---|---|---|---|
| SOI | 0xFF, 0xD8 | none | Start Of Image | |
| SOF0 | 0xFF, 0xC0 | variable size | Start Of Frame (Baseline DCT) | Indicates that this is a baseline DCT-based JPEG, and specifies the width, height, number of components, and component subsampling (e.g., 4:2:0). |
| SOF2 | 0xFF, 0xC2 | variable size | Start Of Frame (Progressive DCT) | Indicates that this is a progressive DCT-based JPEG, and specifies the width, height, number of components, and component subsampling (e.g., 4:2:0). |
| DHT | 0xFF, 0xC4 | variable size | Define Huffman Table(s) | Specifies one or more Huffman tables. |
| DQT | 0xFF, 0xDB | variable size | Define Quantization Table(s) | Specifies one or more quantization tables. |
| DRI | 0xFF, 0xDD | 4 bytes | Define Restart Interval | Specifies the interval between RSTn markers, in macroblocks. This marker is followed by two bytes indicating the fixed size so it can be treated like any other variable size segment. |
| SOS | 0xFF, 0xDA | variable size | Start Of Scan | Begins a top-to-bottom scan of the image. In baseline DCT JPEG images, there is generally a single scan. Progressive DCT JPEG images usually contain multiple scans. This marker specifies which slice of data it will contain, and is immediately followed by entropy-coded data. |
| RSTn | 0xFF, 0xDn (n=0..7) | none | Restart | Inserted every r macroblocks, where r is the restart interval set by a DRI marker. Not used if there was no DRI marker. The low 3 bits of the marker code cycle in value from 0 to 7. |
| APPn | 0xFF, 0xEn | variable size | Application-specific | For example, an Exif JPEG file uses an APP1 marker to store metadata, laid out in a structure based closely on TIFF. |
| COM | 0xFF, 0xFE | variable size | Comment | Contains a text comment. |
| EOI | 0xFF, 0xD9 | none | End Of Image | |

There are other Start Of Frame markers that introduce other kinds of JPEG encodings.

Since several vendors might use the same APPn marker type, application-specific markers often begin with a standard or vendor name (e.g., "Exif" or "Adobe") or some other identifying string.

At a restart marker, block-to-block predictor variables are reset, and the bitstream is synchronized to a byte boundary. Restart markers provide means for recovery after bitstream error, such as transmission over an unreliable network or file corruption. Since the runs of macroblocks between restart markers may be independently decoded, these runs may be decoded in parallel.

JPEG codec example

Although a JPEG file can be encoded in various ways, most commonly it is done with JFIF encoding. The encoding process consists of several steps:

- The representation of the colors in the image is converted from RGB to Y′CBCR, consisting of one luma component (Y'), representing brightness, and two chroma components, (CB and CR), representing color. This step is sometimes skipped.

- The resolution of the chroma data is reduced, usually by a factor of 2 or 3. This reflects the fact that the eye is less sensitive to fine color details than to fine brightness details.

- The image is split into blocks of 8×8 pixels, and for each block, each of the Y, CB, and CR data undergoes the Discrete Cosine Transform (DCT), which was developed in 1974 by N. Ahmed, T. Natarajan and K. R. Rao; see Citation 1 in Discrete cosine transform. A DCT is similar to a Fourier transform in the sense that it produces a kind of spatial frequency spectrum.

- The amplitudes of the frequency components are quantized. Human vision is much more sensitive to small variations in color or brightness over large areas than to the strength of high-frequency brightness variations. Therefore, the magnitudes of the high-frequency components are stored with a lower accuracy than the low-frequency components. The quality setting of the encoder (for example 50 or 95 on a scale of 0–100 in the Independent JPEG Group's library[17]) affects to what extent the resolution of each frequency component is reduced. If an excessively low quality setting is used, the high-frequency components are discarded altogether.

- The resulting data for all 8×8 blocks is further compressed with a lossless algorithm, a variant of Huffman encoding.

The decoding process reverses these steps, except the quantization because it is irreversible. In the remainder of this section, the encoding and decoding processes are described in more detail.

Encoding

Many of the options in the JPEG standard are not commonly used, and as mentioned above, most image software uses the simpler JFIF format when creating a JPEG file, which among other things specifies the encoding method. Here is a brief description of one of the more common methods of encoding when applied to an input that has 24 bits per pixel (eight each of red, green, and blue). This particular option is a lossy data compression method.

Color space transformation

First, the image should be converted from RGB into a different color space called Y′CBCR (or, informally, YCbCr). It has three components Y', CB and CR: the Y' component represents the brightness of a pixel, and the CB and CR components represent the chrominance (split into blue and red components). This is basically the same color space as used by digital color television as well as digital video including video DVDs, and is similar to the way color is represented in analog PAL video and MAC (but not by analog NTSC, which uses the YIQ color space). The Y′CBCR color space conversion allows greater compression without a significant effect on perceptual image quality (or greater perceptual image quality for the same compression). The compression is more efficient because the brightness information, which is more important to the eventual perceptual quality of the image, is confined to a single channel. This more closely corresponds to the perception of color in the human visual system. The color transformation also improves compression by statistical decorrelation.

A particular conversion to Y′CBCR is specified in the JFIF standard, and should be performed for the resulting JPEG file to have maximum compatibility. However, some JPEG implementations in "highest quality" mode do not apply this step and instead keep the color information in the RGB color model,[citation needed] where the image is stored in separate channels for red, green and blue brightness components. This results in less efficient compression, and would not likely be used when file size is especially important.
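The JFIF conversion is commonly written with BT.601-derived coefficients in full range (all three components use 0–255 for 8-bit samples). This Python sketch applies those equations per pixel, rounding and clamping to 0–255; the function names are hypothetical, and real encoders usually use fixed-point arithmetic rather than floats.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range JFIF RGB -> Y'CbCr for 8-bit samples."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return tuple(min(255, max(0, round(c))) for c in (y, cb, cr))


def ycbcr_to_rgb(y, cb, cr):
    """Inverse conversion, as a decoder would apply it."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return tuple(min(255, max(0, round(c))) for c in (r, g, b))


print(rgb_to_ycbcr(255, 255, 255))  # (255, 128, 128): white has neutral chroma
```

Neutral grays map to Cb = Cr = 128, which is why most of the color information of a typical photo ends up in small chroma deviations around that midpoint.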

Downsampling

Due to the densities of color- and brightness-sensitive receptors in the human eye, humans can see considerably more fine detail in the brightness of an image (the Y' component) than in the hue and color saturation of an image (the Cb and Cr components). Using this knowledge, encoders can be designed to compress images more efficiently.

The transformation into the Y′CBCR color model enables the next usual step, which is to reduce the spatial resolution of the Cb and Cr components (called "downsampling" or "chroma subsampling"). The ratios at which the downsampling is ordinarily done for JPEG images are 4:4:4 (no downsampling), 4:2:2 (reduction by a factor of 2 in the horizontal direction), or (most commonly) 4:2:0 (reduction by a factor of 2 in both the horizontal and vertical directions). For the rest of the compression process, Y', Cb and Cr are processed separately and in a very similar manner.
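A minimal Python sketch of 4:2:0 downsampling, assuming the simplest filter: average each 2×2 neighbourhood of a chroma plane (a box filter). Encoders may use other filters; the function name is hypothetical, and odd-sized planes reuse their last row or column.

```python
def downsample_420(plane):
    """Halve a 2-D chroma plane in both directions by averaging 2x2 blocks."""
    h, w = len(plane), len(plane[0])
    out = []
    for i in range(0, h, 2):
        r0, r1 = plane[i], plane[min(i + 1, h - 1)]  # reuse last row if odd
        row = []
        for j in range(0, w, 2):
            j1 = min(j + 1, w - 1)  # reuse last column if odd
            total = r0[j] + r0[j1] + r1[j] + r1[j1]
            row.append((total + 2) // 4)  # integer average, rounding halves up
        out.append(row)
    return out


print(downsample_420([[10, 20], [30, 40]]))  # [[25]]
```

The output has a quarter as many samples as the input, which is where most of the size saving of 4:2:0 comes from.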

Block splitting

After subsampling, each channel must be split into 8×8 blocks. Depending on chroma subsampling, this yields Minimum Coded Unit (MCU) blocks of size 8×8 (4:4:4 – no subsampling), 16×8 (4:2:2), or most commonly 16×16 (4:2:0). In video compression MCUs are called macroblocks.

If the data for a channel does not represent an integer number of blocks then the encoder must fill the remaining area of the incomplete blocks with some form of dummy data. Filling the edges with a fixed color (for example, black) can create ringing artifacts along the visible part of the border;[citation needed] repeating the edge pixels is a common technique that reduces (but does not necessarily completely eliminate) such artifacts,[citation needed] and more sophisticated border filling techniques can also be applied[specify].
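The edge-repetition approach mentioned above can be sketched in Python as follows. As a simplifying assumption, each channel is padded to a multiple of 8 on its own (a real encoder pads to whole MCUs), and the function names are hypothetical.

```python
def pad_to_blocks(plane, block=8):
    """Pad a 2-D list to multiples of `block` by repeating the last column and row."""
    h, w = len(plane), len(plane[0])
    ph = -(-h // block) * block  # round height up to a multiple of block
    pw = -(-w // block) * block  # round width up to a multiple of block
    padded = [row + [row[-1]] * (pw - w) for row in plane]
    last = padded[-1]
    padded += [list(last) for _ in range(ph - h)]
    return padded


def split_blocks(plane, block=8):
    """Return block x block tiles in raster order; dimensions must be multiples of block."""
    return [
        [row[c:c + block] for row in plane[r:r + block]]
        for r in range(0, len(plane), block)
        for c in range(0, len(plane[0]), block)
    ]


plane = [[10 * r + c for c in range(5)] for r in range(3)]  # a 3x5 channel
padded = pad_to_blocks(plane)
print(len(padded), len(padded[0]), padded[7][7])  # 8 8 24
```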

Discrete cosine transform

Next, each 8×8 block of each component (Y, Cb, Cr) is converted to a frequency-domain representation, using a normalized, two-dimensional type-II discrete cosine transform (DCT), which was introduced by N. Ahmed, T. Natarajan and K. R. Rao in 1974; see Citation 1 in Discrete cosine transform. The DCT is sometimes referred to as "type-II DCT" in the context of a family of transforms as in discrete cosine transform, and the corresponding inverse (IDCT) is denoted as "type-III DCT".

As an example, one such 8×8 8-bit subimage might be:

Before computing the DCT of the 8×8 block, its values are shifted from a positive range to one centered around zero. For an 8-bit image, each entry in the original block falls in the range [0, 255]. The midpoint of the range (in this case, the value 128) is subtracted from each entry to produce a data range that is centered around zero, so that the modified range is [−128, 127]. This step reduces the dynamic range requirements in the DCT processing stage that follows. (Aside from the difference in dynamic range within the DCT stage, this step is mathematically equivalent to subtracting 1024 from the DC coefficient after performing the transform – which may be a better way to perform the operation on some architectures since it involves performing only one subtraction rather than 64 of them.)

This step results in the following values:

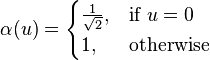

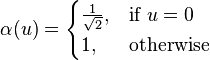

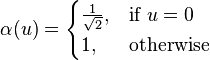

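The next step is to take the two-dimensional DCT, which is given by:

$$G_{u,v} = \frac{1}{4}\,\alpha(u)\,\alpha(v)\sum_{x=0}^{7}\sum_{y=0}^{7} g_{x,y}\cos\left[\frac{(2x+1)u\pi}{16}\right]\cos\left[\frac{(2y+1)v\pi}{16}\right]$$

where

- $u$ is the horizontal spatial frequency, for the integers $0 \le u < 8$.

- $v$ is the vertical spatial frequency, for the integers $0 \le v < 8$.

- $\alpha(u) = \begin{cases} \frac{1}{\sqrt{2}}, & \text{if } u = 0 \\ 1, & \text{otherwise} \end{cases}$ is a normalizing scale factor to make the transformation orthonormal

- $g_{x,y}$ is the pixel value at coordinates $(x,y)$

- $G_{u,v}$ is the DCT coefficient at coordinates $(u,v)$.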

If we perform this transformation on our matrix above, we get the following (rounded to the nearest two digits beyond the decimal point):

Note the top-left corner entry with the rather large magnitude. This is the DC coefficient. DC stands for direct current.[citation needed] It defines the basic tone of the whole block, being proportional to the block's average value. The DC coefficient may also be called the constant component. The remaining 63 coefficients are called the AC coefficients, where AC may stand for alternating components.[18] The advantage of the DCT is its tendency to aggregate most of the signal in one corner of the result, as may be seen above. The quantization step to follow accentuates this effect while simultaneously reducing the overall size of the DCT coefficients, resulting in a signal that is easy to compress efficiently in the entropy stage.
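A direct (and deliberately slow) Python sketch of the level shift and the 8×8 type-II DCT, G[u][v] = ¼·α(u)·α(v)·ΣΣ g[x][y]·cos((2x+1)uπ/16)·cos((2y+1)vπ/16) with α(0) = 1/√2 and α(k) = 1 otherwise. The function names are hypothetical; real codecs use fast factorized DCTs instead of this four-level loop. A flat block shows the energy-compaction effect in its extreme form: every AC coefficient is zero.

```python
import math


def alpha(k):
    """Normalizing factor: 1/sqrt(2) for the zero-frequency term, otherwise 1."""
    return 1 / math.sqrt(2) if k == 0 else 1.0


def level_shift(block):
    """Shift 8-bit samples from [0, 255] to [-128, 127]."""
    return [[p - 128 for p in row] for row in block]


def dct2(g):
    """Naive 8x8 type-II DCT; the first index of g pairs with x and u."""
    G = [[0.0] * 8 for _ in range(8)]
    for u in range(8):
        for v in range(8):
            total = 0.0
            for x in range(8):
                for y in range(8):
                    total += (
                        g[x][y]
                        * math.cos((2 * x + 1) * u * math.pi / 16)
                        * math.cos((2 * y + 1) * v * math.pi / 16)
                    )
            G[u][v] = 0.25 * alpha(u) * alpha(v) * total
    return G


flat = [[200] * 8 for _ in range(8)]
G = dct2(level_shift(flat))
print(round(G[0][0], 6))  # 576.0, i.e. 8 x (200 - 128); all AC terms are ~0
```

With this normalization the DC term equals 8 times the block mean, which is also why shifting all 64 samples by 128 changes the DC coefficient by exactly 1024 and leaves the AC coefficients untouched.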

The DCT temporarily increases the bit-depth of the data, since the DCT coefficients of an 8-bit/component image take up to 11 or more bits (depending on fidelity of the DCT calculation) to store. This may force the codec to temporarily use 16-bit bins to hold these coefficients, doubling the size of the image representation at this point; they are typically reduced back to 8-bit values by the quantization step. The temporary increase in size at this stage is not a performance concern for most JPEG implementations, because typically only a very small part of the image is stored in full DCT form at any given time during the image encoding or decoding process.

Quantization

The human eye is good at seeing small differences in brightness over a relatively large area, but not so good at distinguishing the exact strength of a high frequency brightness variation. This allows one to greatly reduce the amount of information in the high frequency components. This is done by simply dividing each component in the frequency domain by a constant for that component, and then rounding to the nearest integer. This rounding operation is the only lossy operation in the whole process (other than chroma subsampling) if the DCT computation is performed with sufficiently high precision. As a result of this, it is typically the case that many of the higher frequency components are rounded to zero, and many of the rest become small positive or negative numbers, which take many fewer bits to represent.

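The elements in the quantization matrix control the compression ratio, with larger values producing greater compression. A typical quantization matrix (for a quality of 50% as specified in the original JPEG Standard) is as follows:

$$
Q = \begin{bmatrix}
16 & 11 & 10 & 16 & 24 & 40 & 51 & 61 \\
12 & 12 & 14 & 19 & 26 & 58 & 60 & 55 \\
14 & 13 & 16 & 24 & 40 & 57 & 69 & 56 \\
14 & 17 & 22 & 29 & 51 & 87 & 80 & 62 \\
18 & 22 & 37 & 56 & 68 & 109 & 103 & 77 \\
24 & 35 & 55 & 64 & 81 & 104 & 113 & 92 \\
49 & 64 & 78 & 87 & 103 & 121 & 120 & 101 \\
72 & 92 & 95 & 98 & 112 & 100 & 103 & 99
\end{bmatrix}
$$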

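The quantized DCT coefficients are computed with

$$
B_{j,k} = \operatorname{round}\left(\frac{G_{j,k}}{Q_{j,k}}\right) \quad \text{for } j = 0, 1, \ldots, 7;\; k = 0, 1, \ldots, 7
$$

where $G$ is the matrix of unquantized DCT coefficients, $Q$ is the quantization matrix above, and $B$ is the matrix of quantized DCT coefficients.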

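Using this quantization matrix with the DCT coefficient matrix from above results in:

$$
B = \begin{bmatrix}
-26 & -3 & -6 & 2 & 2 & -1 & 0 & 0 \\
0 & -2 & -4 & 1 & 1 & 0 & 0 & 0 \\
-3 & 1 & 5 & -1 & -1 & 0 & 0 & 0 \\
-3 & 1 & 2 & -1 & 0 & 0 & 0 & 0 \\
1 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0
\end{bmatrix}
$$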

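For example, using −415 (the DC coefficient) and rounding to the nearest integer:

$$
B_{0,0} = \operatorname{round}\left(\frac{-415}{16}\right) = \operatorname{round}(-25.94) = -26
$$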
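The quantization rule $B_{j,k} = \operatorname{round}(G_{j,k}/Q_{j,k})$ and its decoder-side inverse fit in a few lines of Python. This is a sketch, not a codec: it hard-codes the example luminance table quoted above (the one given in Annex K of the standard), and the names `quantize` and `dequantize` are illustrative. Note that Python's `round()` sends exact halves to the nearest even integer, and encoders may break such ties differently.

```python
# Example luminance quantization table quoted above (the "quality 50" table).
Q50 = [
    [16, 11, 10, 16, 24, 40, 51, 61],
    [12, 12, 14, 19, 26, 58, 60, 55],
    [14, 13, 16, 24, 40, 57, 69, 56],
    [14, 17, 22, 29, 51, 87, 80, 62],
    [18, 22, 37, 56, 68, 109, 103, 77],
    [24, 35, 55, 64, 81, 104, 113, 92],
    [49, 64, 78, 87, 103, 121, 120, 101],
    [72, 92, 95, 98, 112, 100, 103, 99],
]


def quantize(g, q=Q50):
    """B[j][k] = round(G[j][k] / Q[j][k]); the rounding is where information is lost."""
    return [[round(g[j][k] / q[j][k]) for k in range(8)] for j in range(8)]


def dequantize(b, q=Q50):
    """Decoder side: multiply back entry by entry (the rounding error is not recovered)."""
    return [[b[j][k] * q[j][k] for k in range(8)] for j in range(8)]


g = [[0.0] * 8 for _ in range(8)]
g[0][0] = -415                      # the DC coefficient from the example
print(quantize(g)[0][0])            # round(-415 / 16)
```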

Entropy coding

Entropy coding is a special form of lossless data compression. It involves arranging the image components in a "zigzag" order that groups similar frequencies together, applying run-length encoding (RLE) to the runs of zeros, and then using Huffman coding on what is left.

The JPEG standard also allows, but does not require, decoders to support arithmetic coding, which is mathematically more efficient than Huffman coding. However, this feature has rarely been used, both because it was historically covered by patents requiring royalty-bearing licenses and because it is slower to encode and decode than Huffman coding. Arithmetic coding typically makes files about 5–7% smaller.

The previous quantized DC coefficient is used to predict the current quantized DC coefficient. The difference between the two is encoded rather than the actual value. The encoding of the 63 quantized AC coefficients does not use such prediction differencing.
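A minimal sketch of this DC prediction, assuming a list of quantized DC values for consecutive blocks of one color component (the values in the usage line are hypothetical). The predictor starts at 0, as at the beginning of a scan; a full codec also resets it after restart markers and keeps a separate predictor for each component.

```python
from itertools import accumulate


def dc_differences(dc_values: list[int]) -> list[int]:
    """Encode each block's quantized DC coefficient as the difference from the previous one."""
    diffs, prediction = [], 0
    for dc in dc_values:
        diffs.append(dc - prediction)
        prediction = dc
    return diffs


def dc_values_from_differences(diffs: list[int]) -> list[int]:
    """Decoder side: a running sum undoes the differencing."""
    return list(accumulate(diffs))


print(dc_differences([-26, -24, -30, -30]))   # hypothetical DC values of four blocks
```

Neighboring blocks usually have similar average brightness, so the differences tend to be small numbers that the entropy coder can represent cheaply.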

The zigzag sequence for the above quantized coefficients is shown below. (The format shown is just for ease of understanding/viewing.)

| −26 | | | | | | | |
|---|---|---|---|---|---|---|---|
| −3 | 0 | | | | | | |
| −3 | −2 | −6 | | | | | |
| 2 | −4 | 1 | −3 | | | | |
| 1 | 1 | 5 | 1 | 2 | | | |
| −1 | 1 | −1 | 2 | 0 | 0 | | |
| 0 | 0 | 0 | −1 | −1 | 0 | 0 | |
| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0 | 0 | 0 | 0 | 0 | 0 | 0 | |
| 0 | 0 | 0 | 0 | 0 | 0 | | |
| 0 | 0 | 0 | 0 | 0 | | | |
| 0 | 0 | 0 | 0 | | | | |
| 0 | 0 | 0 | | | | | |
| 0 | 0 | | | | | | |
| 0 | | | | | | | |

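If the $i$-th block is represented by $B_i$ and positions within each block are represented by $(p, q)$, where $p = 0, 1, \ldots, 7$ and $q = 0, 1, \ldots, 7$, then any coefficient in the DCT image can be represented as $B_i(p, q)$. Thus, in the above scheme, the order of encoding coefficients (for the $i$-th block) is $B_i(0,0)$, $B_i(0,1)$, $B_i(1,0)$, $B_i(2,0)$, $B_i(1,1)$, $B_i(0,2)$, $B_i(0,3)$, $B_i(1,2)$, and so on.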
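The zigzag traversal can be generated rather than tabulated. The sketch below assumes that $p$ indexes rows and $q$ columns of the quantized matrix; the helper names `zigzag_order` and `to_zigzag` are hypothetical. It walks the anti-diagonals $p + q = s$, alternating direction on each one.

```python
def zigzag_order(n: int = 8) -> list[tuple[int, int]]:
    """Positions (p, q) of an n x n block in zigzag order.

    Odd anti-diagonals run from top-right to bottom-left (p increasing);
    even ones run from bottom-left to top-right.
    """
    order = []
    for s in range(2 * n - 1):
        diagonal = [(p, s - p) for p in range(n) if 0 <= s - p < n]  # p increasing
        if s % 2 == 0:
            diagonal.reverse()
        order.extend(diagonal)
    return order


def to_zigzag(block: list[list[int]]) -> list[int]:
    """Flatten a square coefficient block into zigzag order."""
    return [block[p][q] for p, q in zigzag_order(len(block))]


print(zigzag_order()[:8])
```

Applied to the quantized matrix $B$ of the example, `to_zigzag` reproduces the diagonals listed above, followed by the long run of trailing zeros that the entropy coder exploits.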

This encoding mode is called baseline sequential encoding. JPEG also supports progressive encoding. While sequential encoding encodes the coefficients of a single block at a time (in zigzag order), progressive encoding encodes similarly positioned coefficients of all blocks in one go, followed by the next-positioned coefficients of all blocks, and so on. So, if the image is divided into $N$ 8×8 blocks $\{B_0, B_1, \ldots, B_{N-1}\}$, then progressive encoding first encodes $B_i(0,0)$ for all blocks, i.e., for all $i = 0, 1, \ldots, N-1$. This is followed by encoding the $B_i(0,1)$ coefficient of all blocks, then the $B_i(1,0)$ coefficient of all blocks, then the $B_i(2,0)$ coefficient of all blocks, and so on.

Once all similarly positioned coefficients have been encoded, the next position to be encoded is the next one in the zigzag traversal shown above. Progressive JPEG encoding has been found to usually give better compression than baseline sequential encoding, because different Huffman tables (see below), tailored to different frequencies, can be used on each "scan" or "pass" (which includes similarly positioned coefficients), though the difference is not large.
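The difference between the two orderings can be sketched as lists of (block index, position) pairs. This models only the simplified one-position-per-pass description given here: actual progressive JPEG scans usually carry a band of zigzag positions at once (spectral selection) and may send coefficient bits over several passes (successive approximation). All function names are hypothetical.

```python
def zigzag_order(n: int = 8) -> list[tuple[int, int]]:
    """Positions (p, q) of an n x n block in zigzag order."""
    order = []
    for s in range(2 * n - 1):
        diagonal = [(p, s - p) for p in range(n) if 0 <= s - p < n]
        if s % 2 == 0:
            diagonal.reverse()
        order.extend(diagonal)
    return order


def sequential_order(num_blocks: int) -> list[tuple[int, tuple[int, int]]]:
    """Every coefficient of block 0 in zigzag order, then block 1, and so on."""
    zz = zigzag_order()
    return [(i, pos) for i in range(num_blocks) for pos in zz]


def progressive_order(num_blocks: int) -> list[tuple[int, tuple[int, int]]]:
    """One zigzag position per pass, taken from every block before moving on."""
    zz = zigzag_order()
    return [(i, pos) for pos in zz for i in range(num_blocks)]


print(sequential_order(2)[:3])
print(progressive_order(2)[:4])
```

Both orders visit exactly the same coefficients; only the sequence changes, which is what lets a progressive decoder show a coarse version of the whole image early.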

In the rest of the article, it is assumed that the coefficient pattern generated is due to sequential mode.

In order to encode the above generated coefficient pattern, JPEG uses Huffman encoding. The JPEG standard provides general-purpose Huffman tables; encoders may also choose to generate Huffman tables optimized for the actual frequency distributions in images being encoded.

The process of encoding the zigzag-ordered quantized data begins with a run-length encoding, explained below, where:

- $x$ is the non-zero, quantized AC coefficient.

- RUNLENGTH is the number of zeros that came before this non-zero AC coefficient.

- SIZE is the number of bits required to represent $x$.

- AMPLITUDE is the bit representation of $x$.

The run-length encoding works by examining each non-zero AC coefficient $x$ and determining how many zeros came before it (that is, since the previous non-zero AC coefficient). With this information, two symbols are created:

| Symbol 1 | Symbol 2 |
|---|---|
| (RUNLENGTH, SIZE) | (AMPLITUDE) |

RUNLENGTH and SIZE share a single byte, so each holds only 4 bits of information. The high 4 bits hold the number of zeros, while the low 4 bits give the number of bits needed to encode the value of $x$.
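The following minimal sketch computes SIZE, AMPLITUDE and the packed Symbol 1 byte for a single coefficient; the function names are illustrative. Positive amplitudes are written in plain binary. For negative values it uses the convention of the JPEG standard's Huffman procedure: $x + 2^{\text{SIZE}} - 1$, which is the same as the low SIZE bits of $x - 1$ in two's complement.

```python
def size_category(x: int) -> int:
    """SIZE: number of bits needed for |x| (0 for x == 0)."""
    return abs(x).bit_length()


def amplitude_bits(x: int) -> str:
    """AMPLITUDE as a bit string of length SIZE.

    Negative values are written as x + 2**SIZE - 1, so their leading bit is
    always 0, while positive values always start with 1.
    """
    size = size_category(x)
    if size == 0:
        return ""
    if x < 0:
        x += (1 << size) - 1
    return format(x, f"0{size}b")


def symbol1_byte(runlength: int, size: int) -> int:
    """Pack (RUNLENGTH, SIZE): RUNLENGTH in the high nibble, SIZE in the low nibble."""
    if not (0 <= runlength <= 15 and 0 <= size <= 15):
        raise ValueError("RUNLENGTH and SIZE must each fit in 4 bits")
    return (runlength << 4) | size


# (1, 2)(-3) from the example: one zero, then -3, which needs 2 bits.
print(hex(symbol1_byte(1, size_category(-3))), amplitude_bits(-3))
```

Because the leading bit distinguishes the sign, the decoder needs no separate sign bit: it reads SIZE bits and, if the first one is 0, maps the value back into the negative range.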

An immediate implication is that Symbol 1 can only record a run of at most 15 zeros preceding the non-zero AC coefficient. However, JPEG defines two special Huffman code words. One ends the sequence early when the remaining coefficients are all zero (called "End-of-Block" or "EOB"); the other is used when the run of zeros exceeds 15 before a non-zero AC coefficient is reached. In that case, each run of 16 zeros encountered before a given non-zero AC coefficient is encoded "specially" as (15, 0)(0), a symbol the standard calls ZRL.

The overall process continues until EOB, denoted by (0, 0), is reached.

With this in mind, the sequence from earlier becomes:

(0, 2)(-3); (1, 2)(-3); (0, 2)(-2); (0, 3)(-6); (0, 2)(2); (0, 3)(-4); (0, 1)(1); (0, 2)(-3); (0, 1)(1);

(0, 1)(1); (0, 3)(5); (0, 1)(1); (0, 2)(2); (0, 1)(-1); (0, 1)(1); (0, 1)(-1); (0, 2)(2); (5, 1)(-1);

(0, 1)(-1); (0, 0).

(The first value in the matrix, -26, is the DC coefficient; it is not encoded the same way. See above.)
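The run-length step can be written as a short function that reproduces the sequence above from the zigzag-ordered coefficients. This is a sketch under stated assumptions: the input is all 64 quantized coefficients in zigzag order (index 0, the DC coefficient, is skipped), symbols are returned as `((RUNLENGTH, SIZE), AMPLITUDE)` tuples, EOB is represented as `((0, 0), None)`, and the function name is hypothetical.

```python
def run_length_symbols(zz):
    """Turn the 63 AC coefficients of one block into ((RUNLENGTH, SIZE), AMPLITUDE) symbols."""
    if len(zz) != 64:
        raise ValueError("expected 64 coefficients in zigzag order")
    last = max((i for i in range(1, 64) if zz[i] != 0), default=0)
    symbols, run = [], 0
    for x in zz[1:last + 1]:
        if x == 0:
            run += 1
            continue
        while run > 15:                       # ZRL stands for a run of 16 zeros
            symbols.append(((15, 0), 0))
            run -= 16
        symbols.append(((run, abs(x).bit_length()), x))
        run = 0
    if last < 63:                             # trailing zeros collapse into EOB
        symbols.append(((0, 0), None))
    return symbols


# Zigzag sequence of the example block, padded with its trailing zeros.
zz = [-26, -3, 0, -3, -2, -6, 2, -4, 1, -3, 1, 1, 5, 1, 2,
      -1, 1, -1, 2, 0, 0, 0, 0, 0, -1, -1] + [0] * 38
for symbol in run_length_symbols(zz):
    print(symbol)
```

No EOB is emitted when the last coefficient is itself non-zero, since there are then no trailing zeros to signal.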

From here, frequency calculations are made based on occurrences of the coefficients. In our example block, most of the quantized coefficients are small numbers that are not preceded immediately by a zero coefficient. These more-frequent cases will be represented by shorter code words.
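How frequency counts turn into shorter code words can be illustrated with a textbook Huffman construction over the Symbol 1 values of the example block. This is a simplified sketch, not the procedure of the standard: JPEG limits code lengths to 16 bits and never uses the all-ones code word, and neither restriction is enforced here. Code words are then assigned canonically (shortest first, consecutive values). That matches how a JPEG Huffman table is described by the number of codes of each length plus a symbol list, although here ties within a length are ordered by symbol for simplicity.

```python
import heapq
from collections import Counter
from itertools import count


def huffman_code_lengths(freqs):
    """Code length per symbol from a plain Huffman construction (no 16-bit limit)."""
    if len(freqs) == 1:
        return {sym: 1 for sym in freqs}
    tiebreak = count()                         # keeps heap entries comparable
    heap = [(f, next(tiebreak), [sym]) for sym, f in freqs.items()]
    heapq.heapify(heap)
    lengths = dict.fromkeys(freqs, 0)
    while len(heap) > 1:
        f1, _, syms1 = heapq.heappop(heap)
        f2, _, syms2 = heapq.heappop(heap)
        for sym in syms1 + syms2:
            lengths[sym] += 1                  # each merge adds one bit to its members
        heapq.heappush(heap, (f1 + f2, next(tiebreak), syms1 + syms2))
    return lengths


def canonical_codes(lengths):
    """Assign prefix-free code words in order of increasing length."""
    codes, code, prev_len = {}, 0, 0
    for sym, length in sorted(lengths.items(), key=lambda kv: (kv[1], kv[0])):
        code <<= length - prev_len
        codes[sym] = format(code, f"0{length}b")
        code += 1
        prev_len = length
    return codes


# Symbol 1 values (RUNLENGTH, SIZE) of the example block, EOB included as (0, 0).
example = [(0, 2), (1, 2), (0, 2), (0, 3), (0, 2), (0, 3), (0, 1), (0, 2), (0, 1),
           (0, 1), (0, 3), (0, 1), (0, 2), (0, 1), (0, 1), (0, 1), (0, 2), (5, 1),
           (0, 1), (0, 0)]
lengths = huffman_code_lengths(Counter(example))
codes = canonical_codes(lengths)
print(codes)
```

The most frequent symbol, (0, 1), receives a 1-bit code, while the symbols that occur only once get the longest codes.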

Compression ratio and artifacts

The resulting compression ratio can be varied according to need by being more or less aggressive in the divisors used in the quantization phase. A compression ratio of 10:1 usually results in an image that cannot be distinguished by eye from the original. A ratio of 100:1 is usually possible, but the result will look distinctly artifacted compared to the original. The appropriate level of compression depends on the use to which the image will be put.
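The JPEG standard itself does not define a "quality" number. A common way to make the divisors more or less aggressive is to scale one base table linearly. The sketch below follows the scaling rule assumed to be the one used by the IJG libjpeg library: a percentage of 5000/quality below 50 and 200 − 2·quality from 50 upward, with entries clamped to at least 1 and, for baseline files, at most 255. Treat the exact rule as an assumption. The function name is hypothetical and the base table is the example luminance table quoted above.

```python
# Example luminance table quoted above, used as the quality-50 base.
BASE = [
    [16, 11, 10, 16, 24, 40, 51, 61],
    [12, 12, 14, 19, 26, 58, 60, 55],
    [14, 13, 16, 24, 40, 57, 69, 56],
    [14, 17, 22, 29, 51, 87, 80, 62],
    [18, 22, 37, 56, 68, 109, 103, 77],
    [24, 35, 55, 64, 81, 104, 113, 92],
    [49, 64, 78, 87, 103, 121, 120, 101],
    [72, 92, 95, 98, 112, 100, 103, 99],
]


def scale_quant_table(quality, base=BASE, force_baseline=True):
    """Scale a base table for a 1..100 quality setting (libjpeg-style rule, assumed)."""
    quality = min(max(quality, 1), 100)
    scale = 5000 // quality if quality < 50 else 200 - 2 * quality   # in percent
    table = []
    for row in base:
        scaled_row = []
        for q in row:
            t = (q * scale + 50) // 100                     # rounded percentage scaling
            t = max(t, 1)                                   # a divisor of 0 is not allowed
            t = min(t, 255 if force_baseline else 32767)    # baseline tables hold 8-bit entries
            scaled_row.append(t)
        table.append(scaled_row)
    return table


for quality in (100, 50, 25, 10):
    print(quality, scale_quant_table(quality)[0][:4])
```

Lower quality settings give larger divisors, so more coefficients round to zero and the file shrinks. At quality 100 every divisor becomes 1, yet as explained below, rounding still loses some information.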

(Figure: illustration of edge busyness.[19])

Those who use the World Wide Web may be familiar with the irregularities known as compression artifacts that appear in JPEG images, which may take the form of noise around contrasting edges (especially curves and corners), or 'blocky' images. These are due to the quantization step of the JPEG algorithm. They are especially noticeable around sharp corners between contrasting colors (text is a good example as it contains many such corners). The analogous artifacts in MPEG video are referred to as mosquito noise, as the resulting "edge busyness" and spurious dots, which change over time, resemble mosquitoes swarming around the object.[19][20]

These artifacts can be reduced by choosing a lower level of compression; they may be avoided entirely by saving an image in a lossless file format, though for photographic images this will usually result in a larger file. Images created with ray-tracing programs, for example, can show noticeable blocky shapes on the terrain. Certain low-intensity compression artifacts might be acceptable when simply viewing the images, but can be emphasized if the image is subsequently processed, usually resulting in unacceptable quality. Consider the example below, which demonstrates the effect of lossy compression on an edge detection processing step.

(Figure: the original image and its output from the Canny edge detector, each shown after lossless compression and after lossy compression.)

Some programs allow the user to vary the amount by which individual blocks are compressed. Stronger compression is applied to areas of the image that show fewer artifacts. This way it is possible to manually reduce JPEG file size with less loss of quality.

Since the quantization stage always results in a loss of information, the DCT-based JPEG codec is always lossy. (Information is lost both in quantization and in the rounding of floating-point numbers.) Even if the quantization matrix is a matrix of ones, information will still be lost in the rounding step.

Decoding

Decoding to display the image consists of doing all the above in reverse.

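Taking the quantized DCT coefficient matrix $B$ (after adding the difference of the DC coefficient back in) and taking the entry-for-entry product $F_{u,v} = B_{u,v} Q_{u,v}$ with the quantization matrix $Q$ from above results in

$$
F = \begin{bmatrix}
-416 & -33 & -60 & 32 & 48 & -40 & 0 & 0 \\
0 & -24 & -56 & 19 & 26 & 0 & 0 & 0 \\
-42 & 13 & 80 & -24 & -40 & 0 & 0 & 0 \\
-42 & 17 & 44 & -29 & 0 & 0 & 0 & 0 \\
18 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0
\end{bmatrix}
$$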

which closely resembles the original DCT coefficient matrix for the top-left portion.

The next step is to take the two-dimensional inverse DCT (a 2D type-III DCT), which is given by:

$$
f_{x,y} = \frac{1}{4} \sum_{u=0}^{7} \sum_{v=0}^{7} \alpha(u)\, \alpha(v)\, F_{u,v} \cos\left[\frac{(2x+1)u\pi}{16}\right] \cos\left[\frac{(2y+1)v\pi}{16}\right]
$$

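where

- $x$ is the pixel row, for the integers $0 \le x < 8$.

- $y$ is the pixel column, for the integers $0 \le y < 8$.

- $\alpha(u)$ is defined as above, for the integers $0 \le u < 8$ (that is, $\alpha(0) = 1/\sqrt{2}$ and $\alpha(u) = 1$ otherwise).

- $F_{u,v}$ is the reconstructed approximate coefficient at coordinates $(u, v)$.

- $f_{x,y}$ is the reconstructed pixel value at coordinates $(x, y)$.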

Rounding the output to integer values (since the original had integer values) results in an image with values (still shifted down by 128)

and adding 128 to each entry

This is the decompressed subimage. In general, the decompression process may produce values outside the original input range of $[0, 255]$. If this occurs, the decoder needs to clip the output values to keep them within that range, to prevent overflow when storing the decompressed image with the original bit depth.
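The decoder steps just described (inverse DCT, rounding, adding 128, clipping) can be sketched directly from the formula above. This sketch evaluates the double sum naively, with the same $\alpha$ normalization; the names `inverse_dct_8x8` and `decode_block` are hypothetical, and real decoders use fast integer or fixed-point transforms instead.

```python
import math


def alpha(u: int) -> float:
    """1/sqrt(2) for u == 0, otherwise 1, as in the formula above."""
    return 1 / math.sqrt(2) if u == 0 else 1.0


def inverse_dct_8x8(F):
    """f[x][y] = 1/4 * sum over u, v of alpha(u) alpha(v) F[u][v] cos(...) cos(...)."""
    f = [[0.0] * 8 for _ in range(8)]
    for x in range(8):
        for y in range(8):
            total = 0.0
            for u in range(8):
                for v in range(8):
                    total += (alpha(u) * alpha(v) * F[u][v]
                              * math.cos((2 * x + 1) * u * math.pi / 16)
                              * math.cos((2 * y + 1) * v * math.pi / 16))
            f[x][y] = 0.25 * total
    return f


def decode_block(dequantized):
    """Inverse DCT, round, undo the level shift by adding 128, then clip to [0, 255]."""
    return [[min(255, max(0, round(value) + 128)) for value in row]
            for row in inverse_dct_8x8(dequantized)]


# A block whose only non-zero coefficient is the dequantized DC value -416.
F = [[0] * 8 for _ in range(8)]
F[0][0] = -416
print(decode_block(F)[0])   # every pixel becomes -416 / 8 + 128
```

With a large enough DC value the un-clipped result would exceed 255, which is exactly the case the clipping step guards against.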

The decompressed subimage can be compared to the original subimage by taking the difference (original − decompressed), which results in the following error values:

with an average absolute error of about 5 values per pixel (i.e., $\frac{1}{64} \sum_{x=0}^{7} \sum_{y=0}^{7} |e_{x,y}| \approx 5$, where $e_{x,y}$ is the error at pixel $(x, y)$).

The error is most noticeable in the bottom-left corner where the bottom-left pixel becomes darker than the pixel to its immediate right.

Required precision

The encoding description in the JPEG standard does not fix the precision needed for the output compressed image. However, the JPEG standard (and the similar MPEG standards) includes some precision requirements for decoding, covering all parts of the decoding process (variable-length decoding, inverse DCT, dequantization, renormalization of outputs); the deviation from the output of the reference algorithm must not exceed:

- a maximum 1 bit of difference for each pixel component

- low mean square error over each 8×8-pixel block

- very low mean error over each 8×8-pixel block

- very low mean square error over the whole image

- extremely low mean error over the whole image

These assertions are tested on a large set of randomized input images, to handle the worst cases. The former IEEE 1180–1990 standard contained some similar precision requirements. The precision has a consequence on the implementation of decoders, and it is critical because some encoding processes (notably used for encoding sequences of images like MPEG) need to be able to construct, on the encoder side, a reference decoded image. In order to support 8-bit precision per pixel component output, dequantization and inverse DCT transforms are typically implemented with at least 14-bit precision in optimized decoders.

Effects of JPEG compression

JPEG compression artifacts blend well into photographs with detailed, non-uniform textures, allowing higher compression ratios. Notice how a higher compression ratio first affects the high-frequency textures in the upper-left corner of the image, and how the contrasting lines become fuzzier. A very high compression ratio severely affects the quality of the image, although the overall colors and image form are still recognizable. However, the precision of colors suffers less (for a human eye) than the precision of contours (based on luminance). This justifies first transforming images into a color model that separates the luminance from the chromatic information, and then subsampling the chromatic planes (which may also use lower-quality quantization), in order to preserve the precision of the luminance plane with more information bits.
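As an illustration of separating luminance from chroma and then subsampling the chroma, the sketch below uses the full-range YCbCr conversion of JFIF (with the usual BT.601 coefficients) and a simple 2×2 average for 4:2:0 subsampling. The averaging filter is an assumption: the standard leaves the choice of downsampling filter to the encoder. The function names are hypothetical.

```python
def rgb_to_ycbcr(r, g, b):
    """JFIF-style full-range conversion of one 8-bit RGB pixel to (Y, Cb, Cr)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = -0.168736 * r - 0.331264 * g + 0.5 * b + 128
    cr = 0.5 * r - 0.418688 * g - 0.081312 * b + 128
    return tuple(min(255, max(0, round(c))) for c in (y, cb, cr))


def subsample_420(plane):
    """Halve a chroma plane in both directions by averaging each 2x2 neighborhood.

    Assumes even width and height.
    """
    return [[(plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
             for x in range(0, len(plane[0]), 2)]
            for y in range(0, len(plane), 2)]


print(rgb_to_ycbcr(255, 255, 255), rgb_to_ycbcr(128, 128, 128))
print(subsample_420([[10, 20], [30, 40]]))
```

Neutral grays map to Cb = Cr = 128, so all of their information lands in the Y plane. After 4:2:0 subsampling the two chroma planes together hold half as many samples as the luma plane.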

Sample photographs

For reference, the uncompressed 24-bit RGB bitmap image below (73,242 pixels) would require 219,726 bytes (excluding all other information headers). The file sizes indicated below include the internal JPEG information headers and some metadata. For highest-quality images (Q = 100), about 8.25 bits per color pixel are required. For grayscale images, a minimum of 6.5 bits per pixel is enough (comparable Q = 100 quality color information requires about 25% more encoded bits). The highest-quality image below (Q = 100) is encoded at 9 bits per color pixel, and the medium-quality image (Q = 25) uses 1 bit per color pixel. For most applications, the bit rate should not go below 0.75 bit per pixel (Q = 12.5), as demonstrated by the low-quality image. The image at lowest quality uses only 0.13 bit per pixel and displays very poor color. This is useful when the image will be displayed at a significantly scaled-down size.
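The bits-per-pixel and compression-ratio figures follow from simple arithmetic on the pixel count and the file sizes listed in the table. A short sketch, assuming 3 bytes per uncompressed pixel and header-inclusive file sizes (so bits per pixel are slightly overstated for the coded data):

```python
PIXELS = 73_242
UNCOMPRESSED_BYTES = 3 * PIXELS          # 24-bit RGB, headers excluded: 219,726 bytes


def bits_per_pixel(file_bytes: int, pixels: int = PIXELS) -> float:
    """Average number of coded bits per pixel."""
    return 8 * file_bytes / pixels


def compression_ratio(file_bytes: int, raw_bytes: int = UNCOMPRESSED_BYTES) -> float:
    """Uncompressed size divided by compressed size."""
    return raw_bytes / file_bytes


for label, size in [("Q = 100", 83_261), ("Q = 50", 15_138), ("Q = 25", 9_553)]:
    print(f"{label}: {bits_per_pixel(size):.2f} bit/pixel, {compression_ratio(size):.1f}:1")
```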

Note: The above images are not IEEE / CCIR / EBU test images, and the encoder settings are not specified or available.

| Image quality | Size (bytes) | Compression ratio | Comment |
|---|---|---|---|
| Highest quality (Q = 100) | 83,261 | 2.6:1 | Extremely minor artifacts |
| High quality (Q = 50) | 15,138 | 15:1 | Initial signs of subimage artifacts |
| Medium quality (Q = 25) | 9,553 | 23:1 | Stronger artifacts; loss of high-frequency information |
| Low quality (Q = 10) | 4,787 | 46:1 | Severe high-frequency loss; artifacts on subimage boundaries ("macroblocking") are obvious |
| Lowest quality (Q = 1) | 1,523 | 144:1 | Extreme loss of color and detail; the leaves are nearly unrecognizable |

The medium quality photo uses only 4.3% of the storage space required for the uncompressed image, but has little noticeable loss of detail or visible artifacts. However, once a certain threshold of compression is passed, compressed images show increasingly visible defects. See the article on rate–distortion theory for a mathematical explanation of this threshold effect. A particular limitation of JPEG in this regard is its non-overlapped 8×8 block transform structure. More modern designs such as JPEG 2000 and JPEG XR exhibit a more graceful degradation of quality as the bit usage decreases – by using transforms with a larger spatial extent for the lower frequency coefficients and by using overlapping transform basis functions.

Lossless further compression

From 2004 to 2008 new research emerged on ways to further compress the data contained in JPEG images without modifying the represented image.[21][22][23][24] This has applications in scenarios where the original image is only available in JPEG format, and its size needs to be reduced for archival or transmission. Standard general-purpose compression tools cannot significantly compress JPEG files.

Typically, such schemes take advantage of improvements to the naive scheme for coding DCT coefficients, which fails to take into account:

- Correlations between magnitudes of adjacent coefficients in the same block;

- Correlations between magnitudes of the same coefficient in adjacent blocks;

- Correlations between magnitudes of the same coefficient/block in different channels;

- The DC coefficients, taken together, resemble a downscaled version of the original image multiplied by a scaling factor. Well-known schemes for lossless coding of continuous-tone images can be applied to them, achieving somewhat better compression than the Huffman-coded DPCM used in JPEG.
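The "downscaled image" observation follows from the DCT normalization. With $\alpha(0) = 1/\sqrt{2}$, the DC coefficient is $\frac{1}{8}$ of the sum of the 64 level-shifted samples, that is, 8 × (block mean − 128). The sketch below assumes an 8-bit grayscale image whose sides are multiples of 8, stored as a list of rows; the function names are hypothetical, and it ignores quantization, which divides each DC value by a further constant.

```python
def dc_image(image, shift=128):
    """Per-block DC coefficients: (1/8) * sum of (pixel - shift) over each 8x8 block."""
    height, width = len(image), len(image[0])
    return [[sum(image[y][x] - shift
                 for y in range(by, by + 8) for x in range(bx, bx + 8)) / 8
             for bx in range(0, width, 8)]
            for by in range(0, height, 8)]


def thumbnail_from_dc(dc, shift=128):
    """Undo the scaling: each DC value becomes that block's mean pixel value."""
    return [[d / 8 + shift for d in row] for row in dc]


# A 16x16 image: left half at level 200, right half at level 40.
img = [[200] * 8 + [40] * 8 for _ in range(16)]
dc = dc_image(img)
print(dc)
print(thumbnail_from_dc(dc))
```

The result is a 1/8-scale version of the image, which is why general-purpose image predictors work well on the DC plane.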

Some standard but rarely used options already exist in JPEG to improve the efficiency of coding DCT coefficients: the arithmetic coding option, and the progressive coding option (which produces lower bitrates because values for each coefficient are coded independently, and each coefficient has a significantly different distribution). Modern methods have improved on these techniques by reordering coefficients to group coefficients of larger magnitude together;[21] using adjacent coefficients and blocks to predict new coefficient values;[23] dividing blocks or coefficients up among a small number of independently coded models based on their statistics and adjacent values;[22][23] and most recently, by decoding blocks, predicting subsequent blocks in the spatial domain, and then encoding these to generate predictions for DCT coefficients.[24]

Typically, such methods can compress existing JPEG files between 15 and 25 percent, and for JPEGs compressed at low-quality settings, can produce improvements of up to 65%.[23][24]

A freely available tool called packJPG[25] is based on the 2007 paper "Improved Redundancy Reduction for JPEG Files."

Derived formats for stereoscopic 3D

JPEG Stereoscopic

JPEG Stereoscopic (JPS, extension .jps) is a JPEG-based format for stereoscopic images.[26][27] It has a range of configurations stored in the JPEG APP3 marker field, but usually contains one image of double width, representing two images of identical size in cross-eyed (i.e. left frame on the right half of the image and vice versa) side-by-side arrangement. This file format can be viewed as a JPEG without any special software, or can be processed for rendering in other modes.
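For the common default layout, recovering the two views after ordinary JPEG decoding is a matter of splitting each row in half. This is a sketch only. It assumes the decoded image is a list of pixel rows in the cross-eyed side-by-side arrangement described above (left-eye view in the right half), ignores the other configurations that the APP3 field can describe, and uses a hypothetical function name.

```python
def split_jps_cross_eyed(rows):
    """Split a cross-eyed side-by-side frame into (left_eye, right_eye) views."""
    width = len(rows[0])
    if width % 2:
        raise ValueError("expected an even width: two views of identical size")
    half = width // 2
    left_eye = [row[half:] for row in rows]    # left frame is stored on the right
    right_eye = [row[:half] for row in rows]   # right frame is stored on the left
    return left_eye, right_eye


frame = [[1, 2, 3, 4], [5, 6, 7, 8]]           # tiny stand-in for decoded pixel rows
print(split_jps_cross_eyed(frame))
```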

JPEG Multi-Picture Format

JPEG Multi-Picture Format (MPO, extension .mpo) is a JPEG-based format for multi-view images. It contains two or more JPEG files concatenated together.[28][29] There are also special EXIF fields describing its purpose. This is used by the Fujifilm FinePix Real 3D W1 camera, Panasonic Lumix DMC-TZ20, DMC-TZ30, DMC-TZ60 and DMC-TS4 (FT4), Sony DSC-HX7V, HTC Evo 3D, the JVC GY-HMZ1U AVCHD/MVC extension camcorder, and the Nintendo 3DS for its 3D Camera. In the last few years, due to the growing use of stereoscopic images, much effort has been spent by the scientific community to develop algorithms for stereoscopic image compression.[30][31]

Patent issues

In 2002, Forgent Networks asserted that it owned and would enforce patent rights on the JPEG technology, arising from a patent that had been filed on October 27, 1986, and granted on October 6, 1987 (U.S. Patent 4,698,672). The announcement created a furor reminiscent of Unisys' attempts to assert its rights over the GIF image compression standard.

The JPEG committee investigated the patent claims in 2002 and were of the opinion that they were invalidated by prior art.[32] Others also concluded that Forgent did not have a patent that covered JPEG.[33] Nevertheless, between 2002 and 2004 Forgent was able to obtain about US$105 million by licensing their patent to some 30 companies. In April 2004, Forgent sued 31 other companies to enforce further license payments. In July of the same year, a consortium of 21 large computer companies filed a countersuit, with the goal of invalidating the patent. In addition, Microsoft launched a separate lawsuit against Forgent in April 2005.[34] In February 2006, the United States Patent and Trademark Office agreed to re-examine Forgent's JPEG patent at the request of the Public Patent Foundation.[35] On May 26, 2006 the USPTO found the patent invalid based on prior art. The USPTO also found that Forgent knew about the prior art, and did not tell the Patent Office, making any appeal to reinstate the patent highly unlikely to succeed.[36]

Forgent also possesses a similar patent granted by the European Patent Office in 1994, though it is unclear how enforceable it is.[37]

As of October 27, 2006, the U.S. patent's 20-year term appears to have expired, and in November 2006, Forgent agreed to abandon enforcement of patent claims against use of the JPEG standard.[38]

The JPEG committee has as one of its explicit goals that their standards (in particular their baseline methods) be implementable without payment of license fees, and they have secured appropriate license rights for their JPEG 2000 standard from over 20 large organizations.

Beginning in August 2007, another company, Global Patent Holdings, LLC, claimed that its patent (U.S. Patent 5,253,341), issued in 1993, is infringed by the downloading of JPEG images on either a website or through e-mail. If not invalidated, this patent could apply to any website that displays JPEG images. The patent emerged in July 2007 from a seven-year reexamination by the U.S. Patent and Trademark Office in which all of the original claims of the patent were revoked, but an additional claim (claim 17) was confirmed.[39]

In its first two lawsuits following the reexamination, both filed in Chicago, Illinois, Global Patent Holdings sued the Green Bay Packers, CDW, Motorola, Apple, Orbitz, Officemax, Caterpillar, Kraft and Peapod as defendants. A third lawsuit was filed on December 5, 2007 in South Florida against ADT Security Services, AutoNation, Florida Crystals Corp., HearUSA, MovieTickets.com, Ocwen Financial Corp. and Tire Kingdom, and a fourth lawsuit on January 8, 2008 in South Florida against the Boca Raton Resort & Club. A fifth lawsuit was filed against Global Patent Holdings in Nevada. That lawsuit was filed by Zappos.com, Inc., which was allegedly threatened by Global Patent Holdings, and seeks a judicial declaration that the '341 patent is invalid and not infringed.

Global Patent Holdings had also used the '341 patent to sue or threaten outspoken critics of broad software patents, including Gregory Aharonian[40] and the anonymous operator of a website blog known as the "Patent Troll Tracker."[41] On December 21, 2007, patent lawyer Vernon Francissen of Chicago asked the U.S. Patent and Trademark Office to reexamine the sole remaining claim of the '341 patent on the basis of new prior art.[42]

On March 5, 2008, the U.S. Patent and Trademark Office agreed to reexamine the '341 patent, finding that the new prior art raised substantial new questions regarding the patent's validity.[43] In light of the reexamination, the accused infringers in four of the five pending lawsuits have filed motions to suspend (stay) their cases until completion of the U.S. Patent and Trademark Office's review of the '341 patent. On April 23, 2008, a judge presiding over the two lawsuits in Chicago, Illinois granted the motions in those cases.[44] On July 22, 2008, the Patent Office issued the first "Office Action" of the second reexamination, finding the claim invalid based on nineteen separate grounds.[45] On Nov. 24, 2009, a Reexamination Certificate was issued cancelling all claims.

Beginning in 2011 and continuing as of early 2013, an entity known as Princeton Digital Image Corporation,[46] based in Eastern Texas, began suing large numbers of companies for alleged infringement of U.S. Patent 4,813,056. Princeton claims that the JPEG image compression standard infringes the '056 patent and has sued large numbers of websites, retailers, camera and device manufacturers and resellers. The patent was originally owned and assigned to General Electric. The patent expired in December 2007, but Princeton has sued large numbers of companies for "past infringement" of this patent. (Under U.S. patent laws, a patent owner can sue for "past infringement" up to six years before the filing of a lawsuit, so Princeton could theoretically have continued suing companies until December 2013.) As of March 2013, Princeton had suits pending in New York and Delaware against more than 55 companies. General Electric's involvement in the suit is unknown, although court records indicate that it assigned the patent to Princeton in 2009 and retains certain rights in the patent.[47]

Standards

Here are some examples of standards created by ISO/IEC JTC 1/SC 29 Working Group 1 (WG 1), which includes the Joint Photographic Experts Group and the Joint Bi-level Image Experts Group:

- JPEG (lossy and lossless): ITU-T T.81, ISO/IEC 10918-1

- JPEG extensions: ITU-T T.84

- JPEG-LS (lossless, improved): ITU-T T.87, ISO/IEC 14495-1

- JBIG (lossless, bi-level pictures, fax): ITU-T T.82, ISO/IEC 11544

- JBIG2 (bi-level pictures): ITU-T T.88, ISO/IEC 14492

- JPEG 2000: ITU-T T.800, ISO/IEC 15444-1

- JPEG 2000 extensions: ITU-T T.801

- JPEG XR (called HD Photo before standardization): ITU-T T.832, ISO/IEC 29199-2

Implementations

A very important implementation of a JPEG codec is the free programming library libjpeg of the Independent JPEG Group. It was first published in 1991 and was key for the success of the standard.[48] This library or a direct derivative of it is used in countless applications.

See also


- Better Portable Graphics, a format based on the intra-frame encoding of HEVC

- C-Cube, an early implementer of JPEG in chip form

- Comparison of graphics file formats

- Comparison of layout engines (graphics)

- Deblocking filter (video); similar deblocking methods can be applied to JPEG

- Design rule for Camera File system (DCF)

- File extensions

- Graphics editing program

- Image compression

- Image file formats

- Lenna, the traditional standard image used to test image processing algorithms

- Lossless Image Codec FELICS

- Motion JPEG

- PGF

- PNG

- WebP

References

- [1] "Definition of "JPEG"". Collins English Dictionary. Retrieved 23 May 2013.

- [2] MIME Type Detection in Internet Explorer: Uploaded MIME Types (msdn.microsoft.com)

- [3] JPEG File Layout and Format

- [4] ISO/IEC JTC 1/SC 29 (2009-05-07). "ISO/IEC JTC 1/SC 29/WG 1 – Coding of Still Pictures (SC 29/WG 1 Structure)". Retrieved 2009-11-11.

- [5] ISO/IEC JTC 1/SC 29. "Programme of Work, (Allocated to SC 29/WG 1)". Retrieved 2009-11-07.

- [6] ISO. "JTC 1/SC 29 – Coding of audio, picture, multimedia and hypermedia information". Retrieved 2009-11-11.

- [7] JPEG. "Joint Photographic Experts Group, JPEG Homepage". Retrieved 2009-11-08.

- [8] "T.81 : Information technology – Digital compression and coding of continuous-tone still images – Requirements and guidelines". Retrieved 2009-11-07.

- [9] William B. Pennebaker and Joan L. Mitchell (1993). JPEG still image data compression standard (3rd ed.). Springer. p. 291. ISBN 978-0-442-01272-4.

- [10] ISO. "JTC 1/SC 29 – Coding of audio, picture, multimedia and hypermedia information". Retrieved 2009-11-07.

- [11] JPEG (2009-04-24). "Press Release – 48th WG1 meeting, Maui, USA – JPEG XR enters FDIS status, JPEG File Interchange Format (JFIF) to be standardized as JPEG Part 5". Retrieved 2009-11-09.

- [12] "JPEG File Interchange Format (JFIF)". ECMA TR/98 1st ed. Ecma International. 2009. Retrieved 2011-08-01.

- [13] "Progressive Decoding Overview". Microsoft Developer Network. Microsoft. Retrieved 2012-03-23.

- [14] "JFIF File Format as PDF" (PDF).

- [15] Tom Lane (1999-03-29). "JPEG image compression FAQ". Retrieved 2007-09-11. (q. 14: "Why all the argument about file formats?")

- [16] "ISO/IEC 10918-1 : 1993(E) p.36".

- [17] Thomas G. Lane. "Advanced Features: Compression parameter selection". Using the IJG JPEG Library.

- [18] http://forum.doom9.org/showthread.php?p=184647#post184647

- [19] Phuc-Tue Le Dinh and Jacques Patry. Video compression artifacts and MPEG noise reduction. Video Imaging DesignLine. February 24, 2006. Retrieved May 28, 2009.

- [20] "3.9 mosquito noise: Form of edge busyness distortion sometimes associated with movement, characterized by moving artifacts and/or blotchy noise patterns superimposed over the objects (resembling a mosquito flying around a person's head and shoulders)." ITU-T Rec. P.930 (08/96) Principles of a reference impairment system for video

- [21] I. Bauermann and E. Steinbach. Further Lossless Compression of JPEG Images. Proc. of Picture Coding Symposium (PCS 2004), San Francisco, USA, December 15–17, 2004.

- [22] N. Ponomarenko, K. Egiazarian, V. Lukin and J. Astola. Additional Lossless Compression of JPEG Images, Proc. of the 4th Intl. Symposium on Image and Signal Processing and Analysis (ISPA 2005), Zagreb, Croatia, pp.117–120, September 15–17, 2005.

- [23] M. Stirner and G. Seelmann. Improved Redundancy Reduction for JPEG Files. Proc. of Picture Coding Symposium (PCS 2007), Lisbon, Portugal, November 7–9, 2007.

- [24] Ichiro Matsuda, Yukio Nomoto, Kei Wakabayashi and Susumu Itoh. Lossless Re-encoding of JPEG images using block-adaptive intra prediction. Proceedings of the 16th European Signal Processing Conference (EUSIPCO 2008).

- [25] "Latest Binary Releases of packJPG: V2.3a". January 3, 2008.

- [26] J. Siragusa, D. C. Swift, "General Purpose Stereoscopic Data Descriptor", VRex, Inc., Elmsford, New York, USA, 1997.

- [27] Tim Kemp, JPS files

- [28] "Multi-Picture Format" (PDF). Retrieved 2011-05-29.

- [29] cybereality, MPO2Stereo: Convert Fujifilm MPO files to JPEG stereo pairs, mtbs3d, retrieved 12 January 2010

- [30] Alessandro Ortis, Sebastiano Battiato, A new fast matching method for adaptive compression of stereoscopic images, SPIE - Three-Dimensional Image Processing, Measurement (3DIPM), and Applications 2015, retrieved 30 April 2015

- [31] Alessandro Ortis, Francesco Rundo, Giuseppe Di Giore, Sebastiano Battiato, Adaptive Compression of Stereoscopic Images, International Conference on Image Analysis and Processing (ICIAP) 2013, retrieved 30 April 2015

- [32] "Concerning recent patent claims". Jpeg.org. 2002-07-19. Retrieved 2011-05-29.

- [33] JPEG and JPEG2000 – Between Patent Quarrel and Change of Technology at the Wayback Machine (archived August 17, 2004)

- [34] Kawamoto, Dawn (April 22, 2005). "Graphics patent suit fires back at Microsoft". CNET News. Retrieved 2009-01-28.

- [35] "Trademark Office Re-examines Forgent JPEG Patent". Publish.com. February 3, 2006. Retrieved 2009-01-28.

- [36] "USPTO: Broadest Claims Forgent Asserts Against JPEG Standard Invalid". Groklaw.net. May 26, 2006. Retrieved 2007-07-21.

- [37] "Coding System for Reducing Redundancy". Gauss.ffii.org. Retrieved 2011-05-29.

- [38] "JPEG Patent Claim Surrendered". Public Patent Foundation. November 2, 2006. Retrieved 2006-11-03.

- [39] Ex Parte Reexamination Certificate for U.S. Patent No. 5,253,341 Archived June 2, 2008 at the Wayback Machine

- [40] Workgroup. "Rozmanith: Using Software Patents to Silence Critics". Eupat.ffii.org. Retrieved 2011-05-29.

- [41] "A Bounty of $5,000 to Name Troll Tracker: Ray Niro Wants To Know Who Is saying All Those Nasty Things About Him". Law.com. Retrieved 2011-05-29.

- [42] Reimer, Jeremy (2008-02-05). "Hunting trolls: USPTO asked to reexamine broad image patent". Arstechnica.com. Retrieved 2011-05-29.

- [43] U.S. Patent Office – Granting Reexamination on 5,253,341 C1

- [44] "Judge Puts JPEG Patent On Ice". Techdirt.com. 2008-04-30. Retrieved 2011-05-29.

- [45] "JPEG Patent's Single Claim Rejected (And Smacked Down For Good Measure)". Techdirt.com. 2008-08-01. Retrieved 2011-05-29.

- [46] Workgroup. "Princeton Digital Image Corporation Home Page". Retrieved 2013-05-01.

- [47] Workgroup. "Article on Princeton Court Ruling Regarding GE License Agreement". Retrieved 2013-05-01.

- [48] Jpeg.org

External links

- CompressPic.com for lossless image compression

- JPEG Standard (JPEG ISO/IEC 10918-1 ITU-T Recommendation T.81) at W3.org

- Official Joint Photographic Experts Group site

- JFIF File Format at W3.org

- JPEG viewer in 250 lines of easy to understand python code

- Wotsit.org's entry on the JPEG format

- Example images over the full range of quantization levels from 1 to 100 at visengi.com

- Public domain JPEG compressor in a single C++ source file, along with a matching decompressor at code.google.com

- Example of .JPG file decoding

- Jpeg Decoder Open Source Code, Copyright (C) 1995–1997, Thomas G. Lane.

- JPEG compression and decompression on GPU.



